Cluster management

- 1: Overview

- 2: Upgrade cluster

- 2.1: Upgrade Overview

- 2.2: Upgrade Bare Metal cluster

- 2.3: Upgrade vSphere, CloudStack, Nutanix, or Snow cluster

- 2.4: Upgrade airgapped cluster

- 2.5: Upgrade management components

- 3: Scale cluster

- 3.1: Scale Bare Metal cluster

- 3.2: Scale CloudStack cluster

- 3.3: Scale Nutanix cluster

- 3.4: Scale vSphere cluster

- 4: Nodes

- 5: Networking

- 5.1: Secure connectivity with CNI and Network Policy

- 5.2: Replace EKS Anywhere Cilium with a custom CNI

- 5.3: Multus CNI plugin configuration

- 6: Storage

- 6.1: vSphere storage

- 7: Security

- 7.1: Security best practices

- 7.2: Authenticate cluster with AWS IAM Authenticator

- 7.3: CIS Self-Assessment Guide

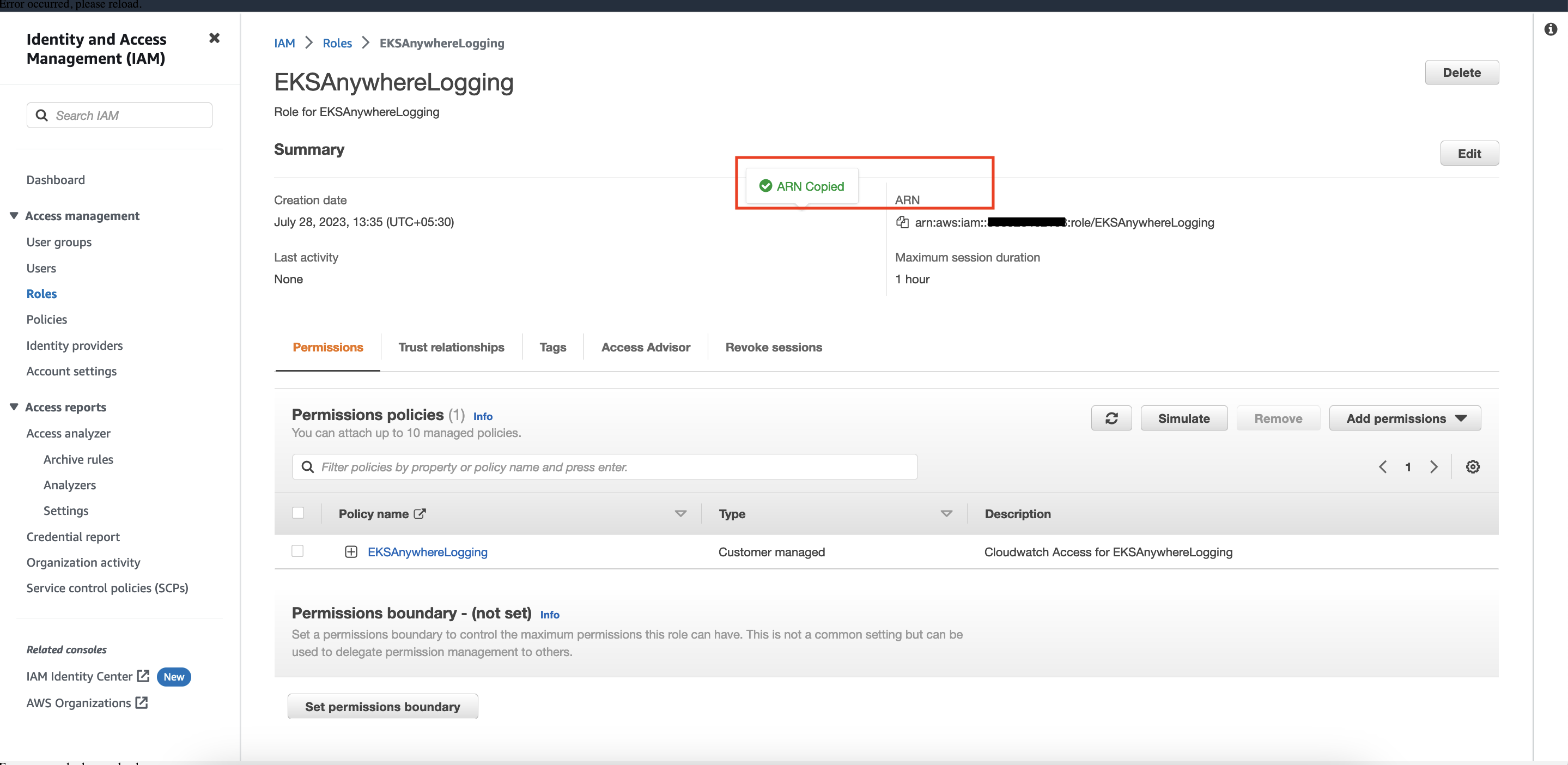

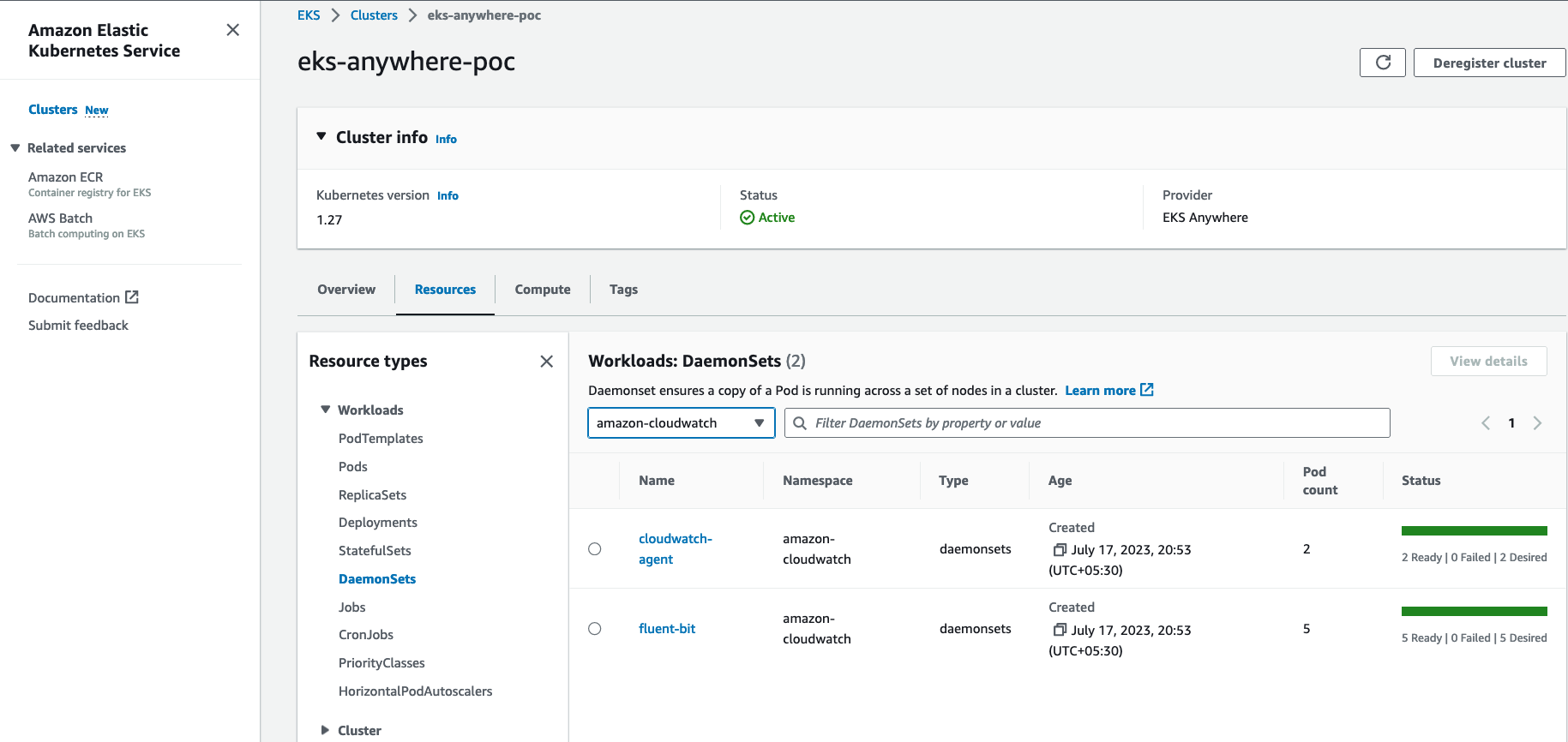

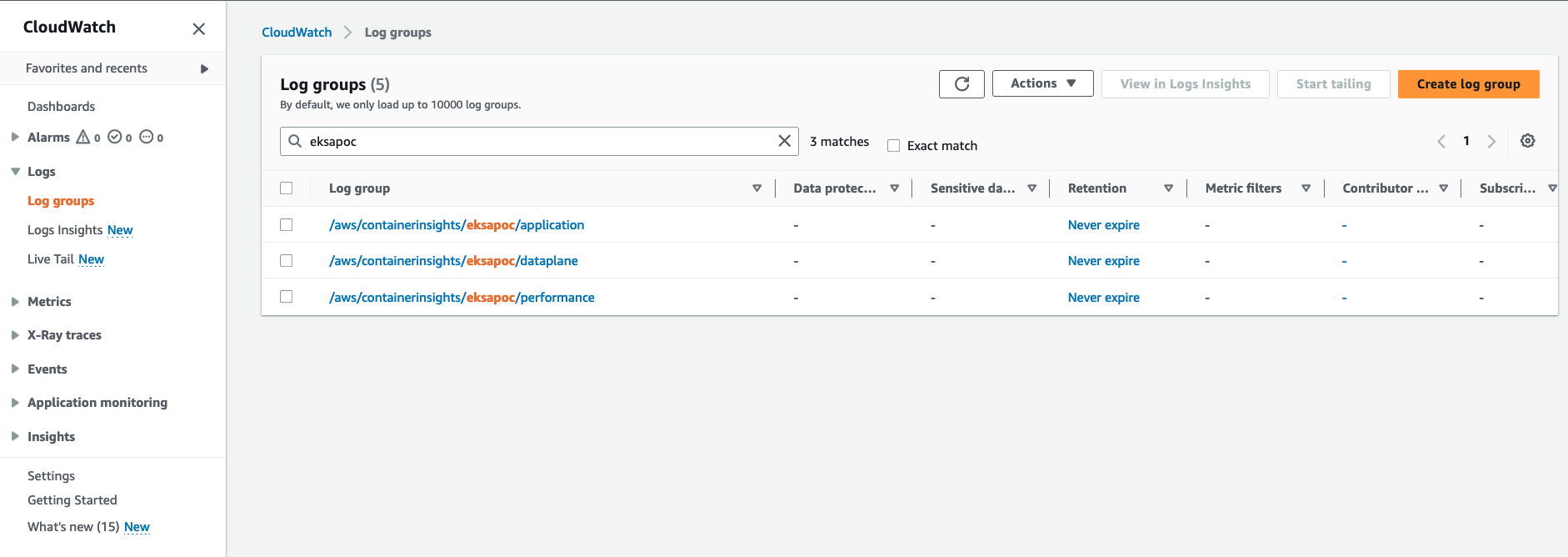

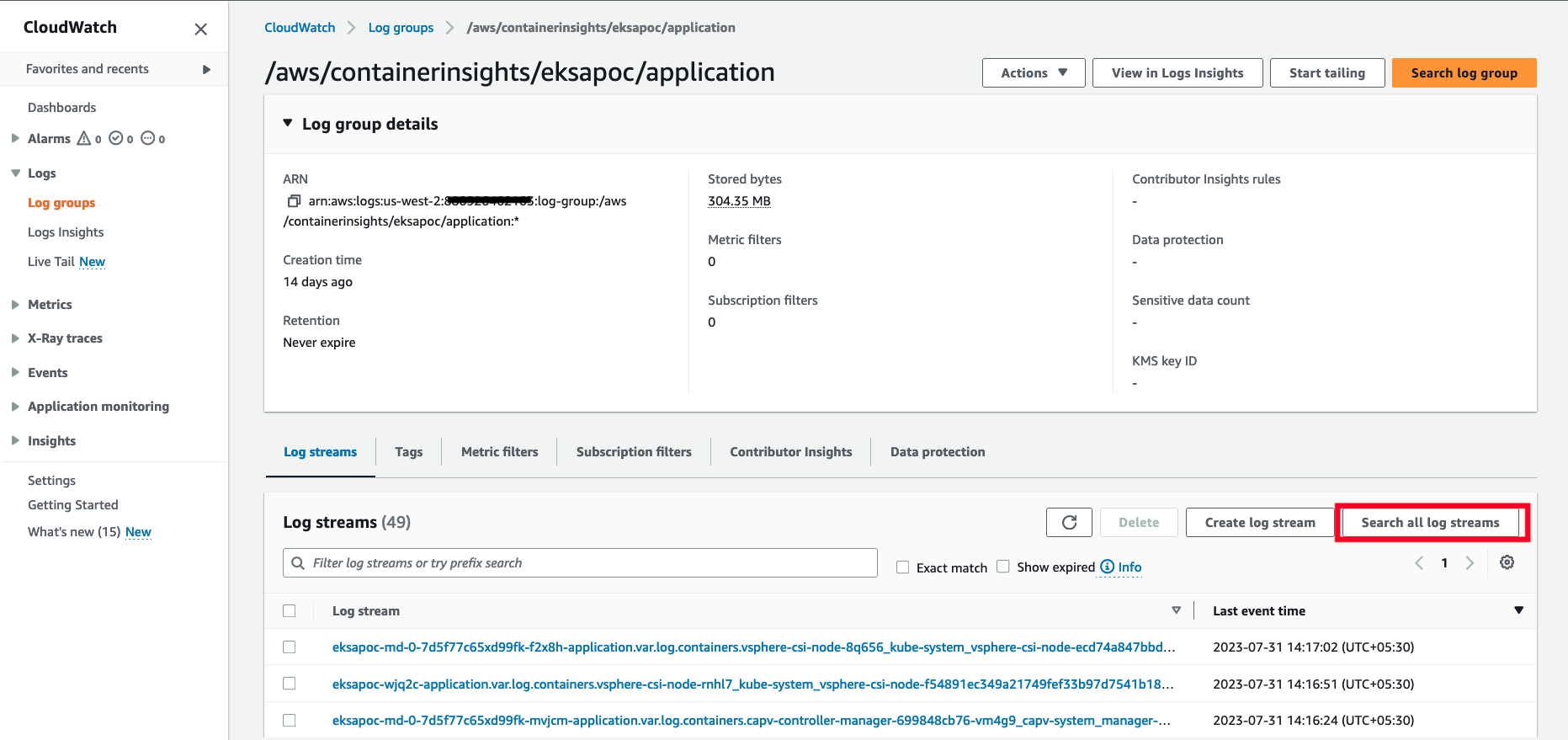

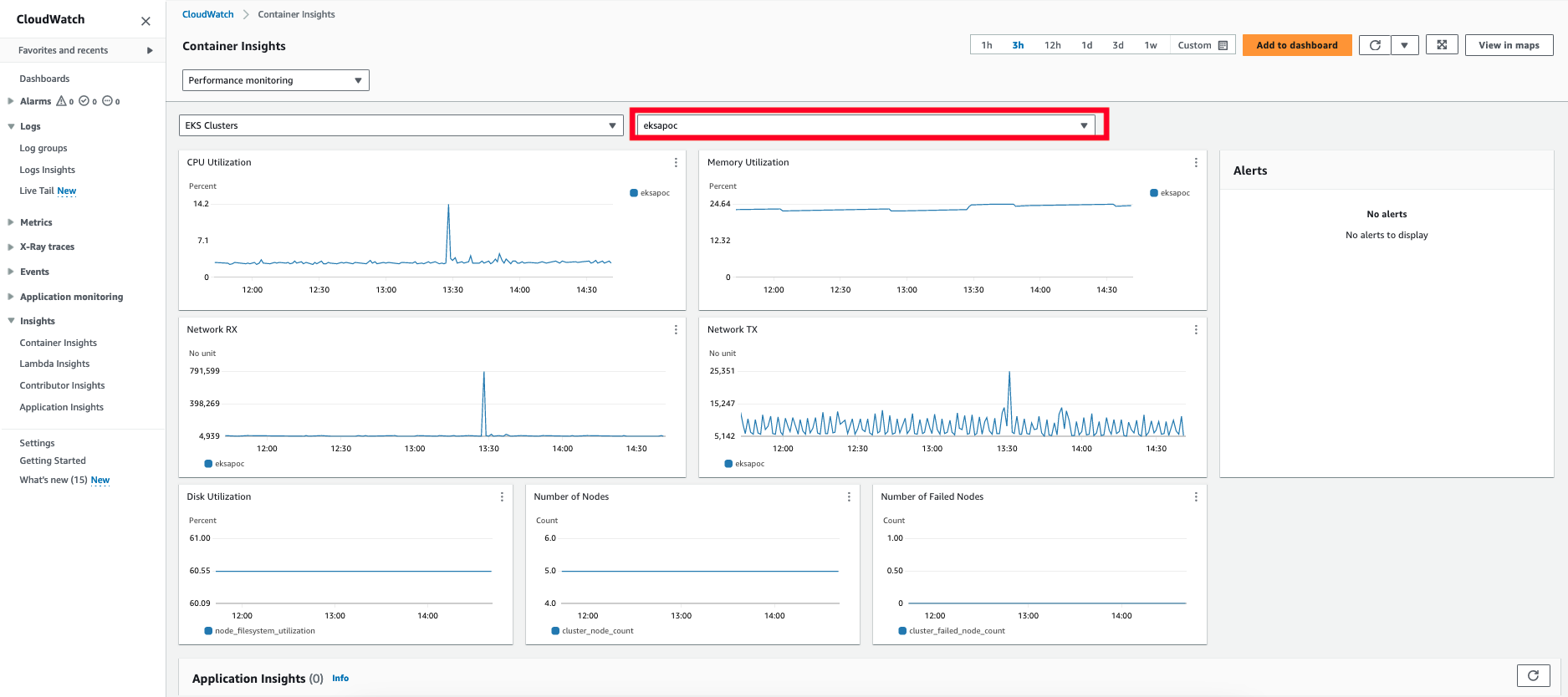

- 8: Observability in EKS Anywhere

- 8.1: Overview

- 8.2: Verify EKS Anywhere cluster status

- 8.3: Connect EKS Anywhere clusters to the EKS console

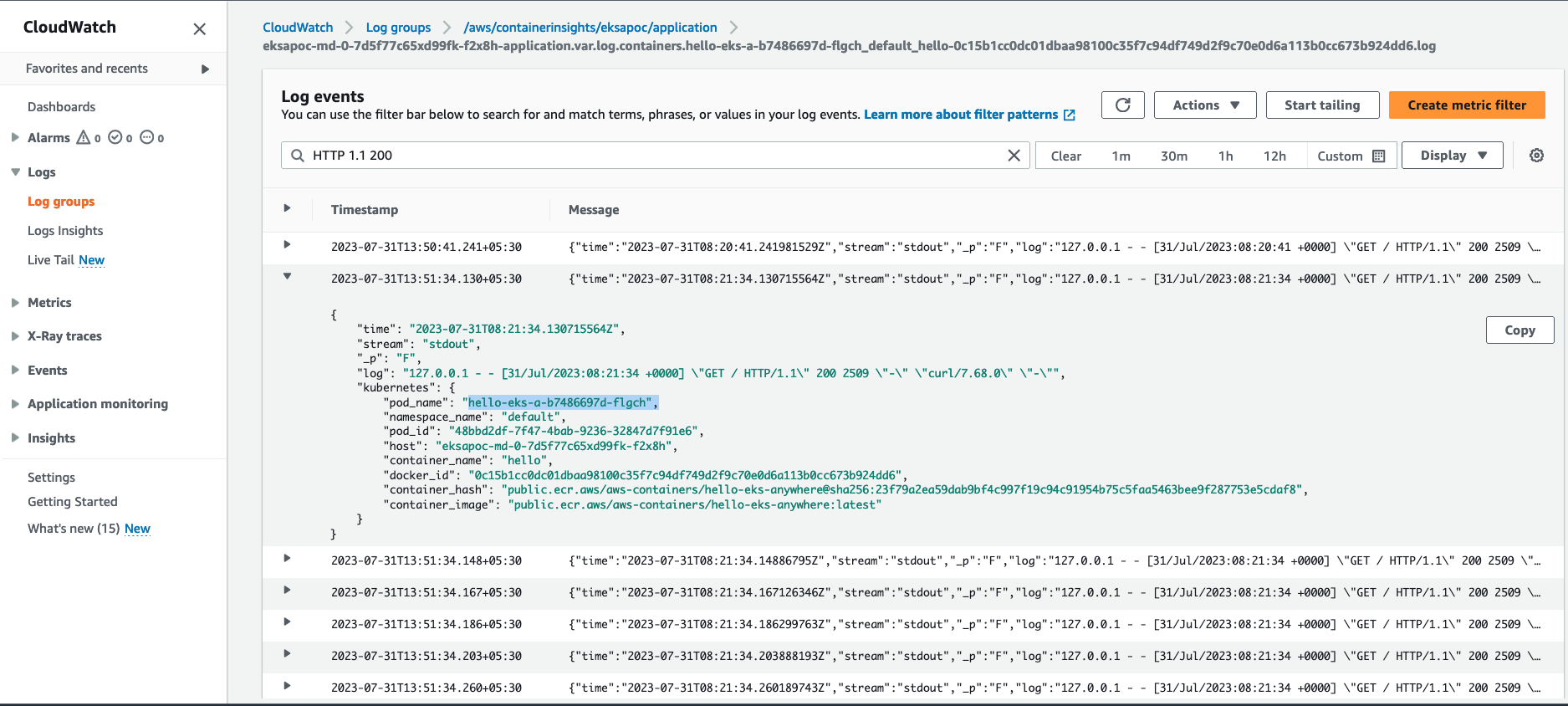

- 8.4: Configure Fluent Bit for CloudWatch

- 8.5: Expose metrics for EKS Anywhere components

- 9: Backup and restore cluster

- 9.1: Backup cluster

- 9.2: Restore cluster

- 10: Certificate management

- 11: etcd backup and restore

- 11.1: External etcd backup and restore

- 11.2: Bottlerocket

- 11.3: Ubuntu and RHEL

- 12: Support

- 12.1: Purchase EKS Anywhere Enterprise Subscriptions

- 12.2: License EKS Anywhere cluster

- 12.3: Share access to EKS Anywhere Curated Packages

- 12.4: Generate an EKS Anywhere support bundle

- 12.5:

- 12.6:

- 12.7:

- 12.8:

- 12.9:

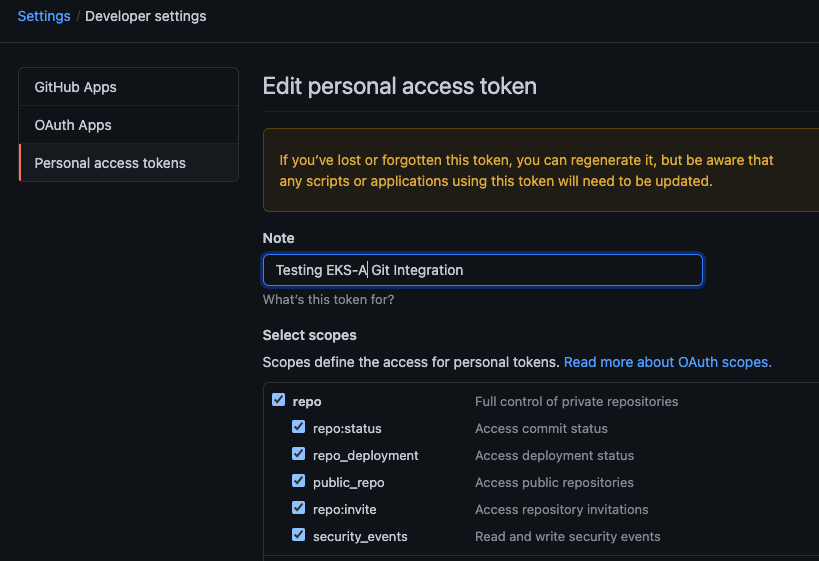

- 13: Manage cluster with GitOps

- 14: Manage cluster with Terraform

- 15: Reboot nodes

- 16: Cluster status

- 17: Delete cluster

- 18: Verify Cluster Images

1 - Overview

Once you have an EKS Anywhere cluster up and running, there are a number of operational tasks you may need to perform, such as changing cluster configuration, upgrading the cluster Kubernetes version, scaling the cluster, and setting up additional operational software such as ingress/load balancing tools, observability tools, and storage drivers.

Cluster Lifecycle Operations

The tools available for cluster lifecycle operations (create, update, upgrade, scale, delete) vary based on the EKS Anywhere architecture you run. You must use the eksctl CLI for cluster lifecycle operations with standalone clusters and management clusters. If you are running a management / workload cluster architecture, you can use the management cluster to manage one-to-many downstream workload clusters. With the management cluster architecture, you can use the eksctl CLI, any Kubernetes API-compatible client, or Infrastructure as Code (IAC) tooling such as Terraform and GitOps to manage the lifecycle of workload clusters. For details on the differences between the architecture options, reference the Architecture page.

To perform cluster lifecycle operations for standalone, management, or workload clusters, you modify the EKS Anywhere Cluster specification, which is a Kubernetes Custom Resource for EKS Anywhere clusters. When you modify a field in an existing Cluster specification, EKS Anywhere reconciles the infrastructure and Kubernetes components until they match the new desired state you defined. These operations are asynchronous and you can validate the state of your cluster following the guidance on the Verify Cluster page.

To change a cluster configuration, update the field(s) in the Cluster specification and apply it to your standalone or management cluster. To upgrade the Kubernetes version, update the kubernetesVersion field in the Cluster specification and apply it to your standalone or management cluster. To scale the cluster, update the control plane or worker node count in the Cluster specification and apply it to the standalone or management cluster, or alternatively use Cluster Autoscaler to automate the scaling process. This operational model facilitates a declarative, intent-based pattern for defining and managing Kubernetes clusters that aligns with modern IAC and GitOps practices.

Networking

With EKS Anywhere, each virtual machine or bare metal server in the cluster gets an IP address via DHCP. The mechanics of this process vary for each provider (vSphere, bare metal, etc.) and it is handled automatically by EKS Anywhere during machine provisioning. During cluster creation, you must configure an IP address for the cluster’s control plane endpoint that is not in your DHCP address range but is reachable from your cluster’s subnet. This may require changes to your DHCP server to create an IP reservation. The IP address you specify for your cluster control plane endpoint is used as the virtual IP address for kube-vip, which EKS Anywhere uses to load balance traffic across the control plane nodes. You cannot change this IP address after cluster creation.
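The endpoint requirement above can be checked before cluster creation. The following is a minimal sketch, not an EKS Anywhere tool: the function name, the subnet, and the DHCP pool boundaries are illustrative assumptions, and it simplifies "reachable from your cluster's subnet" to "inside the same subnet".

```python
import ipaddress


def endpoint_ip_is_valid(endpoint_ip, subnet_cidr, dhcp_start, dhcp_end):
    """Return True if the IP is inside the subnet but outside the DHCP pool.

    Simplification: "reachable" is treated as "inside the same subnet".
    All addresses used with this helper are hypothetical examples.
    """
    ip = ipaddress.ip_address(endpoint_ip)
    subnet = ipaddress.ip_network(subnet_cidr)
    pool_start = ipaddress.ip_address(dhcp_start)
    pool_end = ipaddress.ip_address(dhcp_end)

    in_subnet = ip in subnet
    in_dhcp_pool = pool_start <= ip <= pool_end
    return in_subnet and not in_dhcp_pool


# Hypothetical network: 10.0.0.0/24 with a DHCP pool of .10 to .200.
print(endpoint_ip_is_valid("10.0.0.250", "10.0.0.0/24", "10.0.0.10", "10.0.0.200"))
print(endpoint_ip_is_valid("10.0.0.50", "10.0.0.0/24", "10.0.0.10", "10.0.0.200"))
```

The first call passes because the address is in the subnet and outside the pool; the second fails because the DHCP server could hand the same address to a node.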

You additionally must configure CIDR blocks for Pods and Services in the Cluster specification during cluster creation. Each node in EKS Anywhere clusters receives an IP range subset from the Pod CIDR block; see Node IPs configuration for details. EKS Anywhere uses Cilium as the Container Network Interface (CNI). The Cilium version in EKS Anywhere, “EKS Anywhere Cilium”, contains a subset of the open source Cilium capabilities. EKS Anywhere configures Cilium in Kubernetes host-scope IPAM mode, which delegates the address allocation to each individual node in the cluster. You can optionally replace the EKS Anywhere Cilium installation with a different CNI after cluster creation. Reference the Custom CNI page for details.
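To illustrate how a Pod CIDR block is divided among nodes, here is a small sketch using Python's standard `ipaddress` module. It assumes a per-node mask size of /24 (the upstream Kubernetes default for IPv4); the value actually used in your cluster depends on the Node IPs configuration. The CIDR values are the ones used in the example cluster specs later in this guide.

```python
import ipaddress
from itertools import islice


def node_pod_ranges(pod_cidr, node_mask_size=24, count=3):
    """Return the first `count` per-node subnets carved from the Pod CIDR.

    node_mask_size=24 is an assumption (the upstream Kubernetes default).
    """
    network = ipaddress.ip_network(pod_cidr)
    subnets = network.subnets(new_prefix=node_mask_size)
    return [str(subnet) for subnet in islice(subnets, count)]


def max_nodes(pod_cidr, node_mask_size=24):
    """Number of per-node ranges that fit in the Pod CIDR."""
    network = ipaddress.ip_network(pod_cidr)
    return 2 ** (node_mask_size - network.prefixlen)


def cidrs_overlap(first, second):
    """Pod and Service CIDR blocks should not overlap."""
    return ipaddress.ip_network(first).overlaps(ipaddress.ip_network(second))


print(node_pod_ranges("192.168.0.0/16"))
print(max_nodes("192.168.0.0/16"))
print(cidrs_overlap("192.168.0.0/16", "10.96.0.0/12"))
```

Under these assumptions, a /16 Pod CIDR yields 256 per-node /24 ranges, which bounds the number of nodes that can receive a range.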

Security

The Shared Responsibility Model for EKS Anywhere is different from that of other AWS services that run in AWS Cloud, and you are responsible for the ongoing security of the EKS Anywhere clusters you operate. Be sure to review and follow the EKS Anywhere Security Best Practices.

Support

EKS Anywhere is open source and free to use. To receive support for your EKS Anywhere clusters, you can optionally purchase EKS Anywhere Enterprise Subscriptions to get 24/7 support from AWS subject matter experts and access to EKS Anywhere Curated Packages. See the License cluster page for information on how to apply a license to your EKS Anywhere clusters, and the Generate Support Bundle page for details on how to create a support bundle that contains diagnostics that AWS uses to troubleshoot and resolve issues.

Ingress & Load Balancing

Most Kubernetes-conformant Ingress and Load Balancing options can be used with EKS Anywhere. EKS Anywhere includes Emissary Ingress and MetalLB as EKS Anywhere Curated Packages. For more information on EKS Anywhere Curated Packages, reference the Package Management Overview.

Observability

Most Kubernetes-conformant observability tools can be used with EKS Anywhere. You can optionally use the EKS Connector to view your EKS Anywhere cluster resources in the Amazon EKS console; reference the Connect to console page for details. EKS Anywhere includes the AWS Distro for OpenTelemetry (ADOT) and Prometheus for metrics and tracing as EKS Anywhere Curated Packages. You can use popular tooling such as Fluent Bit for logging, and can track the progress of logging for ADOT on the AWS Observability roadmap. For more information on EKS Anywhere Curated Packages, reference the Package Management Overview.

Storage

Most Kubernetes-conformant storage options can be used with EKS Anywhere. For example, you can use the vSphere CSI driver with EKS Anywhere clusters on vSphere. As of the v0.17.0 EKS Anywhere release, EKS Anywhere no longer installs or manages the vSphere CSI driver automatically, and you must self-manage this add-on. Other popular storage options include Rook/Ceph, OpenEBS, Portworx, and NetApp Trident. Please note, if you are using Bottlerocket with EKS Anywhere, Bottlerocket does not currently support iSCSI; see the Bottlerocket GitHub for details.

2 - Upgrade cluster

2.1 - Upgrade Overview

Version upgrades in EKS Anywhere and Kubernetes are events that should be carefully planned, tested, and implemented. New EKS Anywhere and Kubernetes versions can introduce significant changes, and we recommend that you test the behavior of your applications against new EKS Anywhere and Kubernetes versions before you update your production clusters. Cluster backups should always be performed before initiating an upgrade. When initiating cluster version upgrades, new virtual or bare metal machines are provisioned and the machines on older versions are deprovisioned in a rolling fashion by default.

Unlike Amazon EKS, there are no automatic upgrades in EKS Anywhere and you have full control over when you upgrade. On the end of support date, you can still create new EKS Anywhere clusters with the unsupported Kubernetes version if the EKS Anywhere version you are using includes it. Any existing EKS Anywhere clusters with the unsupported Kubernetes version continue to function. As new Kubernetes versions become available in EKS Anywhere, we recommend that you proactively update your clusters to use the latest available Kubernetes version to remain on versions that receive CVE patches and bug fixes.

Reference the EKS Anywhere Changelog for information on fixes, features, and changes included in each EKS Anywhere release. For details on the EKS Anywhere version support policy, reference the Versioning page.

Upgrade Version Skew

There are a few dimensions of versioning to consider in your EKS Anywhere deployments:

- Management clusters to workload clusters: Management clusters can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of workload clusters. Workload clusters cannot have an EKS Anywhere version greater than management clusters.

- Management components to cluster components: Management components can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of cluster components.

- EKS Anywhere version upgrades: Skipping EKS Anywhere minor versions during upgrade is not supported (v0.17.x to v0.19.x). We recommend you upgrade one EKS Anywhere minor version at a time (v0.17.x to v0.18.x to v0.19.x).

- Kubernetes version upgrades: Skipping Kubernetes minor versions during upgrade is not supported (v1.26.x to v1.28.x). You must upgrade one Kubernetes minor version at a time (v1.26.x to v1.27.x to v1.28.x).

- Kubernetes control plane and worker nodes: As of Kubernetes v1.28, worker nodes can be up to 3 minor versions lower than the Kubernetes control plane minor version. In earlier Kubernetes versions, worker nodes could be up to 2 minor versions lower than the Kubernetes control plane minor version.
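The skew rules above can be expressed as a few small checks. This is an illustrative sketch only; the helper names are hypothetical and it assumes both versions share the same major version. EKS Anywhere performs its own preflight validations during an upgrade.

```python
def minor(version):
    """Extract the minor number from a version such as 'v0.18.7' or '1.27'."""
    return int(version.lstrip("v").split(".")[1])


def upgrade_steps(current, target):
    """List the minor versions to pass through, one minor version at a time."""
    major = current.lstrip("v").split(".")[0]
    prefix = "v" if current.startswith("v") else ""
    return [
        f"{prefix}{major}.{m}"
        for m in range(minor(current) + 1, minor(target) + 1)
    ]


def management_skew_ok(management, workload):
    """Management may lead workload clusters by at most one minor version,
    and workload clusters may never be ahead of the management cluster."""
    return 0 <= minor(management) - minor(workload) <= 1


print(upgrade_steps("v0.17.3", "v0.19.0"))
print(upgrade_steps("1.26", "1.28"))
print(management_skew_ok("v0.19.0", "v0.17.4"))
```

A result with more than one step means the upgrade has to be split into several consecutive upgrades rather than run in one jump.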

User Interfaces

EKS Anywhere versions for management and standalone clusters must be upgraded with the eksctl anywhere CLI. Kubernetes versions for management, standalone, and workload clusters, and EKS Anywhere versions for workload clusters can be upgraded with the eksctl anywhere CLI or with Kubernetes API-compatible clients such as the kubectl CLI, GitOps, or Terraform. For an overview of the differences between management, standalone, workload clusters, reference the Architecture page.

If you are using the eksctl anywhere CLI, there are eksctl anywhere upgrade plan cluster and eksctl anywhere upgrade cluster commands. The former shows the components and versions that will be upgraded. The latter runs the upgrade, first validating a set of preflight checks and then upgrading your cluster to match the updated spec.

If you are using a Kubernetes API-compatible client, you modify your workload cluster spec yaml and apply the modified yaml to your management cluster. The EKS Anywhere lifecycle controller, which runs on the management cluster, reconciles the desired changes on the workload cluster.

As of EKS Anywhere version v0.19.0, management components can be upgraded separately from cluster components. This enables you to get the latest updates to the management components such as Cluster API controller, EKS Anywhere controller, and provider-specific controllers without impact to your workload clusters. Management components can only be upgraded with the eksctl anywhere CLI, which has new eksctl anywhere upgrade plan management-components and eksctl anywhere upgrade management-components commands. For more information, reference the Upgrade Management Components page.

Upgrading EKS Anywhere Versions

Each EKS Anywhere version includes all components required to create and manage EKS Anywhere clusters. For example, this includes:

- Administrative / CLI components (eksctl anywhere CLI, image-builder, diagnostics-collector)

- Management components (Cluster API controller, EKS Anywhere controller, provider-specific controllers)

- Cluster components (Kubernetes, Cilium)

You can find details about each EKS Anywhere release in the EKS Anywhere release manifest. The release manifest contains references to the corresponding bundle manifest for each EKS Anywhere version. Within the bundle manifest, you will find the components included in a specific EKS Anywhere version. The images running in your deployment use the same URI values specified in the bundle manifest for that component. For example, see the bundle manifest for EKS Anywhere version v0.18.7.

To upgrade the EKS Anywhere version of a management or standalone cluster, you install a new version of the eksctl anywhere CLI, change the eksaVersion field in your management or standalone cluster’s spec yaml, and then run the eksctl anywhere upgrade management-components -f cluster.yaml (as of EKS Anywhere version v0.19) or eksctl anywhere upgrade cluster -f cluster.yaml command. The eksctl anywhere upgrade cluster command upgrades both management and cluster components.

To upgrade the EKS Anywhere version of a workload cluster, you change the eksaVersion field in your workload cluster’s spec yaml, and apply the new workload cluster’s spec yaml to your management cluster using the eksctl anywhere CLI or with Kubernetes API-compatible clients.

Upgrading Kubernetes Versions

Each EKS Anywhere version supports at least 4 minor versions of Kubernetes. Kubernetes patch version increments are included in EKS Anywhere minor and patch releases. There are two places in the cluster spec where you can configure the Kubernetes version, Cluster.Spec.KubernetesVersion and Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion. If only Cluster.Spec.KubernetesVersion is set, then that version will apply to both control plane and worker nodes. You can use Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion to upgrade your worker nodes separately from control plane nodes.

The Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion cannot be greater than Cluster.Spec.KubernetesVersion. In Kubernetes versions lower than v1.28.0, the Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion can be at most 2 versions lower than the Cluster.Spec.KubernetesVersion. In Kubernetes versions v1.28.0 or greater, the Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion can be at most 3 versions lower than the Cluster.Spec.KubernetesVersion.
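The worker node version constraints can be sketched as a single check. This is an illustration rather than EKS Anywhere's actual validation: the function names are hypothetical, and it assumes the control plane version decides whether the 2-version or 3-version limit applies.

```python
def parse(version):
    """Turn '1.28' or 'v1.28.0' into a (major, minor) tuple of ints."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def worker_version_allowed(control_plane, worker):
    """Check a worker node group version against the control plane version."""
    cp = parse(control_plane)
    wk = parse(worker)
    # Worker nodes can never be newer than the control plane.
    if wk[0] != cp[0] or wk[1] > cp[1]:
        return False
    # Assumption: the control plane version selects the allowed skew.
    max_skew = 3 if cp >= (1, 28) else 2
    return cp[1] - wk[1] <= max_skew


print(worker_version_allowed("1.28", "1.25"))
print(worker_version_allowed("1.27", "1.24"))
```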

Upgrade Controls

By default, when you upgrade EKS Anywhere or Kubernetes versions, nodes are upgraded one at a time in a rolling fashion. All control plane nodes are upgraded before worker nodes. To control the speed and behavior of rolling upgrades, you can use the upgradeRolloutStrategy.rollingUpdate.maxSurge and upgradeRolloutStrategy.rollingUpdate.maxUnavailable fields in the cluster spec (available on all providers as of EKS Anywhere version v0.19). The maxSurge setting controls how many new machines can be queued for provisioning simultaneously, and the maxUnavailable setting controls how many machines can be unavailable at the same time during upgrades. For more information on these controls, reference Advanced configuration for vSphere, CloudStack, Nutanix, and Snow upgrades and Advanced configuration for bare metal upgrades.

As of EKS Anywhere version v0.19.0, if you are running EKS Anywhere on bare metal, you can use the in-place rollout strategy to upgrade EKS Anywhere and Kubernetes versions, which upgrades the components on the same physical machines without requiring additional server capacity. In-place upgrades are not available for other providers.

2.2 - Upgrade Bare Metal cluster

Note

Upgrade overview information was moved to a dedicated Upgrade Overview page.

Considerations

- Only EKS Anywhere and Kubernetes version upgrades are supported for Bare Metal clusters. You cannot update other cluster configuration.

- Upgrades should never be run from ephemeral nodes (short-lived systems that spin up and down on a regular basis). If the EKS Anywhere version is lower than v0.18.0 and the upgrade fails, you must not delete the KinD bootstrap cluster Docker container. During an upgrade, the bootstrap cluster contains critical EKS Anywhere components. If it is deleted after a failed upgrade, they cannot be recovered.

- It is highly recommended to run the eksctl anywhere upgrade cluster command with the --no-timeouts option when the command is executed through automation. This prevents the CLI from timing out and enables cluster operators to fix issues preventing the upgrade from completing while the process is running.

- In EKS Anywhere version v0.15.0, we introduced the EKS Anywhere cluster lifecycle controller that runs on management clusters and manages workload clusters. The EKS Anywhere lifecycle controller enables you to use Kubernetes API-compatible clients such as kubectl, GitOps, or Terraform for managing workload clusters. In this EKS Anywhere version, the EKS Anywhere cluster lifecycle controller rolls out new nodes in workload clusters when management clusters are upgraded. In EKS Anywhere version v0.16.0, this behavior was changed such that management clusters can be upgraded separately from workload clusters.

- When running workload cluster upgrades after upgrading a management cluster, a machine rollout may be triggered on workload clusters during the workload cluster upgrade, even if the changes to the workload cluster spec didn’t require one (for example scaling down a worker node group).

- Starting with EKS Anywhere v0.18.0, the osImageURL must include the Kubernetes minor version (Cluster.Spec.KubernetesVersion or Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion in the cluster spec). For example, if the Kubernetes version is 1.29, the osImageURL must include 1.29, 1_29, 1-29 or 129. If you are upgrading Kubernetes versions, you must have a new OS image with your target Kubernetes version components.

- If you are running EKS Anywhere in an airgapped environment, you must download the new artifacts and images prior to initiating the upgrade. Reference the Airgapped Upgrades page for more information.
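The osImageURL naming rule in the list above lends itself to a quick pre-upgrade check. The sketch below is a naive substring test with a hypothetical function name and hypothetical URLs; EKS Anywhere's own validation may be stricter or differ in detail.

```python
def os_image_url_matches(os_image_url, kubernetes_version):
    """Return True if the URL contains the Kubernetes minor version in one
    of the accepted spellings (for 1.29: 1.29, 1_29, 1-29 or 129).

    Naive substring check: unrelated digits in the URL can also match.
    """
    major, minor = kubernetes_version.lstrip("v").split(".")[:2]
    candidates = [f"{major}{sep}{minor}" for sep in (".", "_", "-", "")]
    return any(candidate in os_image_url for candidate in candidates)


# Hypothetical image URLs for illustration only.
print(os_image_url_matches("https://example.com/images/ubuntu-kube-1-29.gz", "1.29"))
print(os_image_url_matches("https://example.com/images/ubuntu-kube-1-28.gz", "1.29"))
```

Running a check like this against every TinkerbellDatacenterConfig and TinkerbellMachineConfig image URL before changing kubernetesVersion helps catch a stale image early.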

Upgrade Version Skew

There are a few dimensions of versioning to consider in your EKS Anywhere deployments:

- Management clusters to workload clusters: Management clusters can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of workload clusters. Workload clusters cannot have an EKS Anywhere version greater than management clusters.

- Management components to cluster components: Management components can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of cluster components.

- EKS Anywhere version upgrades: Skipping EKS Anywhere minor versions during upgrade is not supported (v0.17.x to v0.19.x). We recommend you upgrade one EKS Anywhere minor version at a time (v0.17.x to v0.18.x to v0.19.x).

- Kubernetes version upgrades: Skipping Kubernetes minor versions during upgrade is not supported (v1.26.x to v1.28.x). You must upgrade one Kubernetes minor version at a time (v1.26.x to v1.27.x to v1.28.x).

- Kubernetes control plane and worker nodes: As of Kubernetes v1.28, worker nodes can be up to 3 minor versions lower than the Kubernetes control plane minor version. In earlier Kubernetes versions, worker nodes could be up to 2 minor versions lower than the Kubernetes control plane minor version.

Prerequisites

EKS Anywhere upgrades on Bare Metal require at least one spare hardware server for control plane upgrade and one for each worker node group upgrade. During upgrade, the spare hardware server is provisioned with the new version and then an old server is deprovisioned. The deprovisioned server is then reprovisioned with the new version while another old server is deprovisioned. This happens one at a time until all the control plane components have been upgraded, followed by worker node upgrades.

Check upgrade components

Before you perform an upgrade, check the current and new versions of components that are ready to upgrade by typing:

eksctl anywhere upgrade plan cluster -f cluster.yaml

The output should appear similar to the following:

    Checking new release availability...
    NAME                 CURRENT VERSION                 NEXT VERSION
    EKS-A Management     v0.19.0-dev+build.20+a0037f0    v0.19.0-dev+build.26+3bc5008
    cert-manager         v1.13.2+129095a                 v1.13.2+bb56494
    cluster-api          v1.6.1+5efe087                  v1.6.1+9cf3436
    kubeadm              v1.6.1+8ceb315                  v1.6.1+82f1c0a
    tinkerbell           v0.4.0+cdde180                  v0.4.0+e848206
    kubeadm              v1.6.1+6420e1c                  v1.6.1+2f0b35f
    etcdadm-bootstrap    v1.0.10+7094b99                 v1.0.10+a3f0355
    etcdadm-controller   v1.0.17+0259550                 v1.0.17+ba86997

To format the output in json, add -o json to the end of the command line.

Check hardware availability

Next, you must ensure you have enough available hardware for the rolling upgrade operation to function. This type of upgrade requires you to have one spare hardware server for control plane upgrade and one for each worker node group upgrade. Check prerequisites for more information. Available hardware could have been fed to the cluster as extra hardware during a prior create command, or could be fed to the cluster during the upgrade process by providing the hardware CSV file to the upgrade cluster command.

To check if you have enough available hardware for rolling upgrade, you can use the kubectl command below to check if there are hardware objects with the selector labels corresponding to the controlplane/worker node group and without the ownerName label.

kubectl get hardware -n eksa-system --show-labels

For example, if you want to perform upgrade on a cluster with one worker node group with selector label type=worker-group-1, then you must have an additional hardware object in your cluster with the label type=controlplane (for control plane upgrade) and one with type=worker-group-1 (for worker node group upgrade) that doesn’t have the ownerName label.

In the command shown below, eksa-worker2 matches the selector label and it doesn’t have the ownerName label. Thus, it can be used to perform rolling upgrade of worker-group-1. Similarly, eksa-controlplane-spare will be used for rolling upgrade of control plane.

    kubectl get hardware -n eksa-system --show-labels
    NAME                      STATE   LABELS
    eksa-controlplane                 type=controlplane,v1alpha1.tinkerbell.org/ownerName=abhnvp-control-plane-template-1656427179688-9rm5f,v1alpha1.tinkerbell.org/ownerNamespace=eksa-system
    eksa-controlplane-spare           type=controlplane
    eksa-worker1                      type=worker-group-1,v1alpha1.tinkerbell.org/ownerName=abhnvp-md-0-1656427179689-9fqnx,v1alpha1.tinkerbell.org/ownerNamespace=eksa-system
    eksa-worker2                      type=worker-group-1

If you don’t have any available hardware that matches this requirement in the cluster, you can set up a new hardware CSV. You can provide this hardware inventory file to the upgrade cluster command.
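The manual inspection described above can be automated. The following sketch parses the text output of kubectl get hardware --show-labels and groups unowned hardware by selector label. It is an illustration under assumptions: the function name is hypothetical, the labels are taken to be the last whitespace-separated column, and the sample text mirrors the example output shown earlier.

```python
OWNER_LABEL = "v1alpha1.tinkerbell.org/ownerName"

# Sample text mirroring the example output in this section.
SAMPLE_OUTPUT = "\n".join([
    "NAME                      STATE   LABELS",
    "eksa-controlplane                 type=controlplane,"
    "v1alpha1.tinkerbell.org/ownerName=abhnvp-control-plane-template-1656427179688-9rm5f,"
    "v1alpha1.tinkerbell.org/ownerNamespace=eksa-system",
    "eksa-controlplane-spare           type=controlplane",
    "eksa-worker1                      type=worker-group-1,"
    "v1alpha1.tinkerbell.org/ownerName=abhnvp-md-0-1656427179689-9fqnx,"
    "v1alpha1.tinkerbell.org/ownerNamespace=eksa-system",
    "eksa-worker2                      type=worker-group-1",
])


def spare_hardware(kubectl_output):
    """Map each selector label to the hardware names that have no owner."""
    spares = {}
    for line in kubectl_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 2:
            continue
        name, labels = fields[0], fields[-1].split(",")
        # Hardware that already belongs to a machine carries the ownerName label.
        if any(label.startswith(OWNER_LABEL + "=") for label in labels):
            continue
        for label in labels:
            if label != "<none>":
                spares.setdefault(label, []).append(name)
    return spares


print(spare_hardware(SAMPLE_OUTPUT))
```

For a rolling upgrade, each selector label used by the control plane and by every worker node group should appear in the result with at least one hardware name.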

Performing a cluster upgrade

To perform a cluster upgrade you can modify your cluster specification kubernetesVersion field to the desired version.

As an example, to upgrade a cluster with version 1.24 to 1.25 you would change your spec as follows:

    apiVersion: anywhere.eks.amazonaws.com/v1alpha1
    kind: Cluster
    metadata:
      name: dev
    spec:
      controlPlaneConfiguration:
        count: 1
        endpoint:
          host: "198.18.99.49"
        machineGroupRef:
          kind: TinkerbellMachineConfig
          name: dev
      ...
      kubernetesVersion: "1.25"
      ...

NOTE: If you have a custom machine image for your nodes in your cluster config yaml, or you want to upgrade a node or group of nodes to a new operating system version (for example, RHEL 8.7 to RHEL 8.8), you may also need to update your TinkerbellDatacenterConfig or TinkerbellMachineConfig with the new operating system image URL osImageURL.

After updating the spec, run the upgrade cluster command.

Upgrade cluster command

- kubectl CLI: The cluster lifecycle feature lets you use kubectl to talk to the Kubernetes API to upgrade a workload cluster. To use kubectl, run:

      kubectl apply -f eksa-w01-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

  To check the state of a cluster managed with the cluster lifecycle feature, use kubectl to show the cluster object with its status.

  The status field on the cluster object holds information about the current state of the cluster.

      kubectl get clusters w01 -o yaml

  The cluster has been fully upgraded once the status of the Ready condition is marked True. See the cluster status guide for more information.

- GitOps: See Manage separate workload clusters with GitOps

- Terraform: See Manage separate workload clusters with Terraform

  NOTE: For kubectl, GitOps and Terraform:

  - The baremetal controller does not support scaling upgrades and Kubernetes version upgrades in the same request.

  - While scaling a workload cluster, if you need to add additional machines, run:

        eksctl anywhere generate hardware -z updated-hardware.csv > updated-hardware.yaml
        kubectl apply -f updated-hardware.yaml

  - If you want to upgrade multiple workload clusters, make sure that the spare hardware that is available for new nodes to roll out has labels unique to the workload cluster you are trying to upgrade. For instance, for an EKSA cluster named eksa-workload1, the hardware that is assigned for this cluster should have labels that are only going to be used for this cluster, like type=eksa-workload1-cp and type=eksa-workload1-worker. Another workload cluster named eksa-workload2 can have labels like type=eksa-workload2-cp and type=eksa-workload2-worker. Please note that even though labels can be arbitrary, they need to be unique for each workload cluster. Not specifying unique cluster labels can cause cluster upgrades to behave in unexpected ways, which may lead to unsuccessful upgrades and unstable clusters.

- eksctl CLI: To upgrade a workload cluster with eksctl, run:

      eksctl anywhere upgrade cluster -f cluster.yaml \
          --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig
      # To add more hardware, also pass: --hardware-csv <hardware.csv>

  Adding the --kubeconfig option tells eksctl to use the management cluster identified by that kubeconfig file to upgrade a different workload cluster.

  This will upgrade the cluster specification (if specified), upgrade the core components to the latest available versions and apply the changes using the provisioner controllers.
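When upgrades are driven through kubectl, GitOps, or Terraform, the Ready condition mentioned above is the signal to wait for. The sketch below inspects a cluster object that has been loaded as JSON (for example from kubectl get clusters w01 -o json). It assumes the status field follows the usual Kubernetes conditions layout, a list of entries with type and status keys; the sample object is hypothetical and heavily trimmed.

```python
import json


def ready_condition_is_true(cluster):
    """Return True once the cluster's Ready condition reports status "True".

    Assumes status.conditions is a list of {"type": ..., "status": ...}
    entries, following the common Kubernetes conditions convention.
    """
    conditions = cluster.get("status", {}).get("conditions", [])
    return any(
        c.get("type") == "Ready" and c.get("status") == "True"
        for c in conditions
    )


# Hypothetical, trimmed stand-in for the real cluster object.
sample = json.loads("""
{"metadata": {"name": "w01"},
 "status": {"conditions": [
   {"type": "ControlPlaneReady", "status": "True"},
   {"type": "Ready", "status": "False"}]}}
""")
print(ready_condition_is_true(sample))
```

An automation script could poll the cluster object and call this helper until it returns True, with a timeout suited to the size of the cluster.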

Output

Example output:

    ✅ control plane ready
    ✅ worker nodes ready
    ✅ nodes ready
    ✅ cluster CRDs ready
    ✅ cluster object present on workload cluster
    ✅ upgrade cluster kubernetes version increment
    ✅ validate immutable fields
    🎉 all cluster upgrade preflight validations passed
    Performing provider setup and validations
    Ensuring etcd CAPI providers exist on management cluster before upgrade
    Pausing GitOps cluster resources reconcile
    Upgrading core components
    Backing up management cluster's resources before upgrading
    Upgrading management cluster
    Updating Git Repo with new EKS-A cluster spec
    Forcing reconcile Git repo with latest commit
    Resuming GitOps cluster resources kustomization
    Writing cluster config file
    🎉 Cluster upgraded!
    Cleaning up backup resources

Starting in EKS Anywhere v0.18.0, when upgrading a management cluster the CLI depends on the EKS Anywhere Controller to perform the upgrade. In the event an issue occurs and the CLI times out, it may be possible to fix the issue and have the upgrade complete as the EKS Anywhere Controller will continually attempt to complete the upgrade.

During the workload cluster upgrade process, EKS Anywhere pauses the cluster controller reconciliation by adding the paused annotation anywhere.eks.amazonaws.com/paused: true to the EKS Anywhere cluster, provider datacenterconfig and machineconfig resources, before the components upgrade. After the upgrade completes, the annotations are removed so that the cluster controller resumes reconciling the cluster. If the CLI execution is interrupted or times out, the controller won’t reconcile changes to the EKS-A objects until these annotations are removed. You can re-run the CLI to restart the upgrade process or remove the annotations manually with kubectl.

Though not recommended, you can manually pause the EKS Anywhere cluster controller reconciliation to perform extended maintenance work or interact with Cluster API objects directly. To do it, you can add the paused annotation to the cluster resource:

    kubectl annotate clusters.anywhere.eks.amazonaws.com ${CLUSTER_NAME} -n ${CLUSTER_NAMESPACE} anywhere.eks.amazonaws.com/paused=true

After finishing the task, make sure you resume the cluster reconciliation by removing the paused annotation, so that the EKS Anywhere cluster controller can continue working as expected.

    kubectl annotate clusters.anywhere.eks.amazonaws.com ${CLUSTER_NAME} -n ${CLUSTER_NAMESPACE} anywhere.eks.amazonaws.com/paused-

Upgradeable cluster attributes

Cluster:

- kubernetesVersion
- controlPlaneConfiguration.count
- controlPlaneConfiguration.upgradeRolloutStrategy.rollingUpdate.maxSurge
- workerNodeGroupConfigurations.count
- workerNodeGroupConfigurations.kubernetesVersion (in case of modular upgrade)
- workerNodeGroupConfigurations.upgradeRolloutStrategy.rollingUpdate.maxSurge
- workerNodeGroupConfigurations.upgradeRolloutStrategy.rollingUpdate.maxUnavailable

TinkerbellDatacenterConfig:

- osImageURL

Advanced configuration for upgrade rollout strategy

EKS Anywhere allows an optional configuration to customize the behavior of upgrades.

upgradeRolloutStrategy can be configured separately for control plane and for each worker node group.

This template contains an example for control plane under the controlPlaneConfiguration section and for worker node group under workerNodeGroupConfigurations:

    apiVersion: anywhere.eks.amazonaws.com/v1alpha1
    kind: Cluster
    metadata:
      name: my-cluster-name
    spec:
      clusterNetwork:
        cniConfig:
          cilium: {}
        pods:
          cidrBlocks:
          - 192.168.0.0/16
        services:
          cidrBlocks:
          - 10.96.0.0/12
      controlPlaneConfiguration:
        count: 1
        endpoint:
          host: "10.61.248.209"
        machineGroupRef:
          kind: TinkerbellMachineConfig
          name: my-cluster-name-cp
        upgradeRolloutStrategy:
          type: RollingUpdate
          rollingUpdate:
            maxSurge: 1
      datacenterRef:
        kind: TinkerbellDatacenterConfig
        name: my-cluster-name
      kubernetesVersion: "1.25"
      managementCluster:
        name: my-cluster-name
      workerNodeGroupConfigurations:
      - count: 2
        machineGroupRef:
          kind: TinkerbellMachineConfig
          name: my-cluster-name
        name: md-0
        upgradeRolloutStrategy:
          type: RollingUpdate
          rollingUpdate:
            maxSurge: 1
            maxUnavailable: 0
    ---
    ...

upgradeRolloutStrategy

Configuration parameters for upgrade strategy.

upgradeRolloutStrategy.type

Default: RollingUpdate

Type of rollout strategy. Supported values: RollingUpdate, InPlace.

NOTE: The upgrade rollout strategy type must be the same for all control plane and worker nodes.

upgradeRolloutStrategy.rollingUpdate

Configuration parameters for customizing rolling upgrade behavior.

NOTE: The rolling update parameters can only be configured if upgradeRolloutStrategy.type is RollingUpdate.

upgradeRolloutStrategy.rollingUpdate.maxSurge

Default: 1

This cannot be 0 if maxUnavailable is 0.

The maximum number of machines that can be scheduled above the desired number of machines.

Example: When this is set to n, the new worker node group can be scaled up immediately by n when the rolling upgrade starts. Total number of machines in the cluster (old + new) never exceeds (desired number of machines + n). Once scale down happens and old machines are brought down, the new worker node group can be scaled up further ensuring that the total number of machines running at any time does not exceed the desired number of machines + n.

upgradeRolloutStrategy.rollingUpdate.maxUnavailable

Default: 0

This cannot be 0 if maxSurge is 0.

The maximum number of machines that can be unavailable during the upgrade.

This can only be configured for worker nodes.

Example: When this is set to n, the old worker node group can be scaled down by n machines immediately when the rolling upgrade starts. Once new machines are ready, the old worker node group can be scaled down further, followed by scaling up the new worker node group, ensuring that the total number of unavailable machines never exceeds n at any time during the upgrade.

Rolling Upgrades

The RollingUpdate rollout strategy type allows the specification of two parameters that control the desired behavior of rolling upgrades:

- maxSurge - The maximum number of machines that can be scheduled above the desired number of machines. When not specified, the current CAPI default of 1 is used.

- maxUnavailable - The maximum number of machines that can be unavailable during the upgrade. When not specified, the current CAPI default of 0 is used.

Example configuration:

upgradeRolloutStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: 1
    maxUnavailable: 0    # only configurable for worker nodes

Rolling upgrades with no additional hardware

When maxSurge is set to 0 and maxUnavailable is set to 1, it allows for a rolling upgrade without need for additional hardware. Use this configuration if your workloads can tolerate node unavailability.

NOTE: Use this configuration only if the unavailability of at most 1 node is acceptable. For single-node clusters, an additional temporary machine is required. Alternatively, you may recreate the single-node cluster for upgrading and handle data recovery manually.

With this configuration, the rolling upgrade proceeds node by node: each node is fully deprovisioned and deleted, re-provisioned with the upgraded version, and re-joined to the cluster. This means that at any point during the rolling upgrade, one node may be unavailable.
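The following is a minimal sketch of how the no-additional-hardware settings could be generated and sanity-checked before being pasted into a worker node group. The function name rollout_strategy_yaml is hypothetical and not part of EKS Anywhere; it only enforces the rule stated above that maxSurge and maxUnavailable cannot both be 0.

```shell
# Hypothetical helper: print an upgradeRolloutStrategy fragment for a worker
# node group, refusing the combination the documentation disallows.
rollout_strategy_yaml() {
  local max_surge="$1" max_unavailable="$2"
  # maxSurge and maxUnavailable cannot both be 0
  if [ "$max_surge" -eq 0 ] && [ "$max_unavailable" -eq 0 ]; then
    echo "maxSurge and maxUnavailable cannot both be 0" >&2
    return 1
  fi
  cat <<EOF
upgradeRolloutStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: ${max_surge}
    maxUnavailable: ${max_unavailable}
EOF
}

# Rolling upgrade with no additional hardware (worker node groups only,
# because maxUnavailable is not configurable for the control plane).
rollout_strategy_yaml 0 1
```

The printed fragment belongs under an entry of workerNodeGroupConfigurations in the cluster spec.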

In-Place Upgrades

As of EKS Anywhere version v0.19.0, the InPlace rollout strategy type can be used to upgrade the EKS Anywhere and Kubernetes versions by upgrading the components on the same physical machines without requiring additional server capacity.

EKS Anywhere schedules a privileged pod that executes the upgrade logic as a sequence of init containers on each node to be upgraded.

This upgrade logic includes updating the containerd, cri-tools, kubeadm, kubectl and kubelet binaries along with core Kubernetes components and restarting those services.

Due to the nature of this upgrade, temporary downtime of workloads can be expected. It is best practice to configure your clusters in a way that they are resilient to having one node down.

During in place upgrades, EKS Anywhere pauses machine health checks to ensure that new nodes are not rolled out while the node is temporarily down during the upgrade process.

Moreover, autoscaler configuration is not supported when using InPlace upgrade rollout strategy to further ensure that no new nodes are rolled out unexpectedly.

Example configuration:

upgradeRolloutStrategy:
  type: InPlace

Troubleshooting

Attempting to upgrade a cluster by more than one Kubernetes minor release results in the following error.

✅ validate immutable fields

❌ validation failed {"validation": "Upgrade preflight validations", "error": "validation failed with 1 errors: WARNING: version difference between upgrade version (1.21) and server version (1.19) do not meet the supported version increment of +1", "remediation": ""}

Error: failed to upgrade cluster: validations failed

For more errors, see the troubleshooting section.

2.3 - Upgrade vSphere, CloudStack, Nutanix, or Snow cluster

Note

Upgrade overview information was moved to a dedicated Upgrade Overview page.

Considerations

- Upgrades should never be run from ephemeral nodes (short-lived systems that spin up and down on a regular basis). If the EKS Anywhere version is lower than v0.18.0 and the upgrade fails, you must not delete the KinD bootstrap cluster Docker container. During an upgrade, the bootstrap cluster contains critical EKS Anywhere components. If it is deleted after a failed upgrade, they cannot be recovered.

- It is highly recommended to run the eksctl anywhere upgrade cluster command with the --no-timeouts option when the command is executed through automation. This prevents the CLI from timing out and enables cluster operators to fix issues preventing the upgrade from completing while the process is running.

- In EKS Anywhere version v0.13.0, we introduced the EKS Anywhere cluster lifecycle controller that runs on management clusters and manages workload clusters. The EKS Anywhere lifecycle controller enables you to use Kubernetes API-compatible clients such as kubectl, GitOps, or Terraform for managing workload clusters. In this EKS Anywhere version, the EKS Anywhere cluster lifecycle controller rolls out new nodes in workload clusters when management clusters are upgraded. In EKS Anywhere version v0.16.0, this behavior was changed such that management clusters can be upgraded separately from workload clusters.

- When running workload cluster upgrades after upgrading a management cluster, a machine rollout may be triggered on workload clusters during the workload cluster upgrade, even if the changes to the workload cluster spec didn’t require one (for example scaling down a worker node group).

- Starting with EKS Anywhere v0.18.0, the image/template must include the Kubernetes minor version (Cluster.Spec.KubernetesVersion or Cluster.Spec.WorkerNodeGroupConfiguration[].KubernetesVersion in the cluster spec). For example, if the Kubernetes version is 1.24, the image/template must include 1.24, 1_24, 1-24 or 124. If you are upgrading Kubernetes versions, you must have a new image with your target Kubernetes version components.

- If you are running EKS Anywhere on Snow, a new Admin instance is needed when upgrading to new versions of EKS Anywhere. See Upgrade EKS Anywhere AMIs in Snowball Edge devices to upgrade and use a new Admin instance in Snow devices.

- If you are running EKS Anywhere in an airgapped environment, you must download the new artifacts and images prior to initiating the upgrade. Reference the Airgapped Upgrades page for more information.
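The image/template naming rule above can be checked locally before starting an upgrade. The sketch below is only an approximation of that rule: the function name template_includes_k8s_version and the sample template names are hypothetical, the version argument is assumed to be in major.minor form, and the real EKS Anywhere validation may be stricter than this substring match.

```shell
# Hypothetical helper: succeed if NAME contains the Kubernetes minor version
# in one of the accepted spellings (1.24, 1_24, 1-24 or 124 for version 1.24).
template_includes_k8s_version() {
  local name="$1" version="$2"
  local major="${version%%.*}"   # "1.24" -> "1"
  local minor="${version#*.}"    # "1.24" -> "24"
  case "$name" in
    *"${major}.${minor}"*|*"${major}_${minor}"*|*"${major}-${minor}"*|*"${major}${minor}"*)
      return 0 ;;
    *)
      return 1 ;;
  esac
}

# Example with an assumed template name.
if template_includes_k8s_version "ubuntu-2004-kube-v1-24" "1.24"; then
  echo "template name includes the Kubernetes minor version"
fi
```

Because this is a plain substring match, a name that happens to contain the digits 124 for another reason would also pass, so treat the result as a quick sanity check only.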

Upgrade Version Skew

There are a few dimensions of versioning to consider in your EKS Anywhere deployments:

- Management clusters to workload clusters: Management clusters can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of workload clusters. Workload clusters cannot have an EKS Anywhere version greater than management clusters.

- Management components to cluster components: Management components can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of cluster components.

- EKS Anywhere version upgrades: Skipping EKS Anywhere minor versions during upgrade is not supported (v0.17.x to v0.19.x). We recommend you upgrade one EKS Anywhere minor version at a time (v0.17.x to v0.18.x to v0.19.x).

- Kubernetes version upgrades: Skipping Kubernetes minor versions during upgrade is not supported (v1.26.x to v1.28.x). You must upgrade one Kubernetes minor version at a time (v1.26.x to v1.27.x to v1.28.x).

- Kubernetes control plane and worker nodes: As of Kubernetes v1.28, worker nodes can be up to 3 minor versions lower than the Kubernetes control plane minor version. In earlier Kubernetes versions, worker nodes could be up to 2 minor versions lower than the Kubernetes control plane minor version.
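The one-minor-version-at-a-time rule for Kubernetes upgrades can be expressed as a small check. This is a sketch, not the CLI's own preflight validation: the function name is_supported_k8s_upgrade is hypothetical, and treating an unchanged minor version as acceptable is an assumption made here for convenience.

```shell
# Hypothetical helper: succeed when TARGET has the same major version as
# CURRENT and its minor version is equal to or exactly one higher.
is_supported_k8s_upgrade() {
  local current="${1#v}" target="${2#v}"     # tolerate a leading "v"
  local cur_major="${current%%.*}" tgt_major="${target%%.*}"
  local cur_rest="${current#*.}" tgt_rest="${target#*.}"
  local cur_minor="${cur_rest%%.*}" tgt_minor="${tgt_rest%%.*}"
  [ "$cur_major" = "$tgt_major" ] || return 1
  local diff=$(( $tgt_minor - $cur_minor ))
  [ "$diff" -ge 0 ] && [ "$diff" -le 1 ]
}

if is_supported_k8s_upgrade "1.26" "1.28"; then
  echo "supported"
else
  echo "not supported: upgrade one minor version at a time"
fi
```

In this example the check fails because 1.26 to 1.28 skips 1.27, which matches the version increment error shown in the troubleshooting sections.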

Prepare DHCP IP addresses pool

Make sure your DHCP pool has enough available IP addresses to cover the new machines. The number of necessary IPs can be calculated from the machine counts and the maxSurge config. For a create operation, each machine needs 1 IP. For an upgrade operation, the control plane and workers need just 1 extra IP (total, not per node) due to the rolling upgrade strategy. Each external etcd machine needs 1 extra IP address (for example, 3 etcd nodes require 3 more IP addresses) because EKS Anywhere needs to create all the new etcd machines before removing any old ones. You also need additional IPs equal to the number used for maxSurge. After calculating the required IPs, make sure your environment has enough available IPs before performing the upgrade operation.

- Example 1, to create a cluster with 3 control plane nodes, 2 worker nodes and 3 stacked etcd, you will need at least 5 (3+2+0, as stacked etcd is deployed as part of the control plane nodes) available IPs. To upgrade the same cluster with default maxSurge (0), you will need 1 (1+0+0) additional available IP.

- Example 2, to create a cluster with 1 control plane node, 2 worker nodes and 3 unstacked (external) etcd nodes, you will need at least 6 (1+2+3) available IPs. To upgrade the same cluster with default maxSurge (0), you will need at least 4 (1+3+0) additional available IPs.

- Example 3, to upgrade a cluster with 1 control plane node, 2 worker nodes and 3 unstacked (external) etcd nodes, with maxSurge set to 2, you will need at least 6 (1+3+2) additional available IPs.
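The arithmetic in the three examples above can be captured in a short helper. This is a sketch that follows the examples literally (1 shared IP for control plane and workers, plus one per external etcd node, plus the maxSurge value used in the examples); the function name additional_upgrade_ips is hypothetical.

```shell
# Hypothetical helper: additional IPs needed for an upgrade.
# Usage: additional_upgrade_ips EXTERNAL_ETCD_COUNT MAX_SURGE
# Pass 0 for EXTERNAL_ETCD_COUNT when etcd is stacked on the control plane.
additional_upgrade_ips() {
  local external_etcd="$1" max_surge="$2"
  echo $(( 1 + $external_etcd + $max_surge ))
}

additional_upgrade_ips 0 0   # Example 1: stacked etcd
additional_upgrade_ips 3 0   # Example 2: 3 external etcd nodes
additional_upgrade_ips 3 2   # Example 3: 3 external etcd nodes, maxSurge 2
```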

Check upgrade components

Before you perform an upgrade, check the current and new versions of components that are ready to upgrade by typing:

Management Cluster

eksctl anywhere upgrade plan cluster -f mgmt-cluster.yaml

Workload Cluster

eksctl anywhere upgrade plan cluster -f workload-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

The output should appear similar to the following:

Checking new release availability...
NAME                 CURRENT VERSION                 NEXT VERSION
EKS-A Management     v0.19.0-dev+build.170+6a04c21   v0.19.0-dev+build.225+c137128
cert-manager         v1.13.2+a34c207                 v1.14.2+c0da11a
cluster-api          v1.6.1+9bf197f                  v1.6.2+f120729
kubeadm              v1.6.1+2c7274d                  v1.6.2+8091cf6
vsphere              v1.8.5+205ebc5                  v1.8.5+65d2d66
kubeadm              v1.6.1+46e4754                  v1.6.2+44d7c68
etcdadm-bootstrap    v1.0.10+43a3235                 v1.0.10+e5e6ac4
etcdadm-controller   v1.0.17+fc882de                 v1.0.17+3d9ebdc

To format the output as JSON, add -o json to the end of the command line.

Performing a cluster upgrade

To perform a cluster upgrade you can modify your cluster specification kubernetesVersion field to the desired version.

As an example, to upgrade a cluster with version 1.26 to 1.27 you would change your spec

apiVersion: anywhere.eks.amazonaws.com/v1alpha1
kind: Cluster
metadata:
  name: dev
spec:
  controlPlaneConfiguration:
    count: 1
    endpoint:
      host: "198.18.99.49"
    machineGroupRef:
      kind: VSphereMachineConfig
      name: dev
    ...
  kubernetesVersion: "1.27"
...

NOTE: If you have a custom machine image for your nodes you may also need to update your vsphereMachineConfig with a new template. Refer to vSphere Artifacts to build a new OVA template.

Then run the upgrade cluster command.

Upgrade cluster command

- kubectl CLI: The cluster lifecycle feature lets you use kubectl to talk to the Kubernetes API to upgrade an EKS Anywhere cluster. For example, to use kubectl to upgrade a management or workload cluster, you can run:

  # Upgrade a management cluster with cluster name "mgmt"
  kubectl apply -f mgmt-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

  # Upgrade a workload cluster with cluster name "eksa-w01"
  kubectl apply -f eksa-w01-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

  To check the state of a cluster managed with the cluster lifecycle feature, use kubectl to show the cluster object with its status.

  The status field on the cluster object holds information about the current state of the cluster.

  kubectl get clusters w01 -o yaml

  The cluster has been fully upgraded once the status of the Ready condition is marked True. See the cluster status guide for more information.

- GitOps: See Manage separate workload clusters with GitOps

- Terraform: See Manage separate workload clusters with Terraform

Important

For kubectl, GitOps and Terraform

If you want to update the registry mirror credential with kubectl, GitOps or Terraform, you need to update the registry-credentials secret in the eksa-system namespace of your management cluster. For example with kubectl, you can run:

kubectl edit secret -n eksa-system registry-credentials --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

Replace username and password fields with the base64-encoded values of your new username and password. You can encode the values using the echo command, for example:

echo -n 'newusername' | base64

echo -n 'newpassword' | base64

- eksctl CLI: To upgrade an EKS Anywhere cluster with eksctl, run:

  # Upgrade a management cluster with cluster name "mgmt"
  eksctl anywhere upgrade cluster -f mgmt-cluster.yaml

  # Upgrade a workload cluster with cluster name "eksa-w01"
  eksctl anywhere upgrade cluster -f eksa-w01-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

  As noted earlier, adding the --kubeconfig option tells eksctl to use the management cluster identified by that kubeconfig file to upgrade a different workload cluster.

  This will upgrade the cluster specification (if specified), upgrade the core components to the latest available versions and apply the changes using the provisioner controllers.

Output

Example output:

✅ control plane ready

✅ worker nodes ready

✅ nodes ready

✅ cluster CRDs ready

✅ cluster object present on workload cluster

✅ upgrade cluster kubernetes version increment

✅ validate immutable fields

🎉 all cluster upgrade preflight validations passed

Performing provider setup and validations

Ensuring etcd CAPI providers exist on management cluster before upgrade

Pausing GitOps cluster resources reconcile

Upgrading core components

Backing up management cluster's resources before upgrading

Upgrading management cluster

Updating Git Repo with new EKS-A cluster spec

Forcing reconcile Git repo with latest commit

Resuming GitOps cluster resources kustomization

Writing cluster config file

🎉 Cluster upgraded!

Cleaning up backup resources

Starting in EKS Anywhere v0.18.0, when upgrading a management cluster, the CLI depends on the EKS Anywhere Controller to perform the upgrade. If an issue occurs and the CLI times out, it may be possible to fix the issue and have the upgrade complete, because the EKS Anywhere Controller continually attempts to complete the upgrade.

During the workload cluster upgrade process, EKS Anywhere pauses the cluster controller reconciliation by adding the paused annotation anywhere.eks.amazonaws.com/paused: true to the EKS Anywhere cluster, provider datacenterconfig and machineconfig resources, before the components upgrade. After upgrade completes, the annotations are removed so that the cluster controller resumes reconciling the cluster. If the CLI execution is interrupted or times out, the controller won’t reconcile changes to the EKS-A objects until these annotations are removed. You can re-run the CLI to restart the upgrade process or remove the annotations manually with kubectl.

Though not recommended, you can manually pause the EKS Anywhere cluster controller reconciliation to perform extended maintenance work or interact with Cluster API objects directly. To do it, you can add the paused annotation to the cluster resource:

kubectl annotate clusters.anywhere.eks.amazonaws.com ${CLUSTER_NAME} -n ${CLUSTER_NAMESPACE} anywhere.eks.amazonaws.com/paused=true

After finishing the task, make sure you resume the cluster reconciliation by removing the paused annotation, so that EKS Anywhere cluster controller can continue working as expected.

kubectl annotate clusters.anywhere.eks.amazonaws.com ${CLUSTER_NAME} -n ${CLUSTER_NAMESPACE} anywhere.eks.amazonaws.com/paused-

NOTE (vSphere only): If you are upgrading a vSphere cluster created using EKS Anywhere version prior to v0.16.0 that has the vSphere CSI Driver installed in it, please refer to the additional steps listed here before attempting an upgrade.

Upgradeable Cluster Attributes

EKS Anywhere upgrade supports upgrading more than just the kubernetesVersion, allowing you to upgrade a number of fields simultaneously with the same procedure.

Upgradeable Attributes

Cluster:

- kubernetesVersion
- controlPlaneConfiguration.count
- controlPlaneConfiguration.machineGroupRef.name
- controlPlaneConfiguration.upgradeRolloutStrategy.rollingUpdate.maxSurge
- workerNodeGroupConfigurations.count
- workerNodeGroupConfigurations.machineGroupRef.name
- workerNodeGroupConfigurations.kubernetesVersion (in case of modular upgrade)
- workerNodeGroupConfigurations.upgradeRolloutStrategy.rollingUpdate.maxSurge
- workerNodeGroupConfigurations.upgradeRolloutStrategy.rollingUpdate.maxUnavailable
- externalEtcdConfiguration.machineGroupRef.name
- identityProviderRefs (Only for kind:OIDCConfig, kind:AWSIamConfig is immutable)
- gitOpsRef (Once set, you can’t change or delete the field’s content later)
- registryMirrorConfiguration (for non-authenticated registry mirror)
  - endpoint
  - port
  - caCertContent
  - insecureSkipVerify

VSphereMachineConfig:

- datastore
- diskGiB
- folder
- memoryMiB
- numCPUs
- resourcePool
- template
- users

NutanixMachineConfig:

- vcpusPerSocket
- vcpuSockets
- memorySize
- image
- cluster
- subnet
- systemDiskSize

SnowMachineConfig:

- amiID
- instanceType
- physicalNetworkConnector
- sshKeyName
- devices
- containersVolume
- osFamily
- network

CloudStackDatacenterConfig:

- availabilityZones (Can add and remove availability zones provided at least 1 previously configured zone is still present)

CloudStackMachineConfig:

- template
- computeOffering
- diskOffering
- userCustomDetails
- symlinks
- users

OIDCConfig:

- clientID
- groupsClaim
- groupsPrefix
- issuerUrl
- requiredClaims.claim
- requiredClaims.value
- usernameClaim
- usernamePrefix

AWSIamConfig:

- mapRoles
- mapUsers

EKS Anywhere upgrade also supports adding more worker node groups post-creation.

To add more worker node groups, modify your cluster config file to define the additional group(s).

Example:

apiVersion: anywhere.eks.amazonaws.com/v1alpha1
kind: Cluster
metadata:
  name: dev
spec:
  controlPlaneConfiguration:
    ...
  workerNodeGroupConfigurations:
  - count: 2
    machineGroupRef:
      kind: VSphereMachineConfig
      name: my-cluster-machines
    name: md-0
  - count: 2
    machineGroupRef:
      kind: VSphereMachineConfig
      name: my-cluster-machines
    name: md-1
  ...

Worker node groups can use the same machineGroupRef as previous groups, or you can define a new machine configuration for your new group.

Advanced configuration for rolling upgrade

EKS Anywhere allows an optional configuration to customize the behavior of upgrades.

It allows the specification of two parameters that control the desired behavior of rolling upgrades:

- maxSurge - The maximum number of machines that can be scheduled above the desired number of machines. When not specified, the current CAPI default of 1 is used.

- maxUnavailable - The maximum number of machines that can be unavailable during the upgrade. When not specified, the current CAPI default of 0 is used.

Example configuration:

upgradeRolloutStrategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: 1
    maxUnavailable: 0    # only configurable for worker nodes

The upgradeRolloutStrategy configuration can be specified separately for control plane and for each worker node group. This template contains an example for control plane under the controlPlaneConfiguration section and for worker node group under workerNodeGroupConfigurations:

apiVersion: anywhere.eks.amazonaws.com/v1alpha1
kind: Cluster
metadata:
  name: my-cluster-name
spec:
  controlPlaneConfiguration:
    count: 1
    endpoint:
      host: "xx.xx.xx.xx"
    machineGroupRef:
      kind: VSphereMachineConfig
      name: my-cluster-name-cp
    upgradeRolloutStrategy:
      type: RollingUpdate
      rollingUpdate:
        maxSurge: 1
  workerNodeGroupConfigurations:
  - count: 2
    machineGroupRef:
      kind: VSphereMachineConfig
      name: my-cluster-name
    name: md-0
    upgradeRolloutStrategy:
      type: RollingUpdate
      rollingUpdate:
        maxSurge: 1
        maxUnavailable: 0
---
...

upgradeRolloutStrategy

Configuration parameters for upgrade strategy.

upgradeRolloutStrategy.type

Type of rollout strategy. Currently only RollingUpdate is supported.

upgradeRolloutStrategy.rollingUpdate

Configuration parameters for customizing rolling upgrade behavior.

upgradeRolloutStrategy.rollingUpdate.maxSurge

Default: 1

This can not be 0 if maxUnavailable is 0.

The maximum number of machines that can be scheduled above the desired number of machines.

Example: When this is set to n, the new worker node group can be scaled up immediately by n when the rolling upgrade starts. Total number of machines in the cluster (old + new) never exceeds (desired number of machines + n). Once scale down happens and old machines are brought down, the new worker node group can be scaled up further ensuring that the total number of machines running at any time does not exceed the desired number of machines + n.

upgradeRolloutStrategy.rollingUpdate.maxUnavailable

Default: 0

This cannot be 0 if maxSurge is 0.

The maximum number of machines that can be unavailable during the upgrade.

Example: When this is set to n, the old worker node group can be scaled down by n machines immediately when the rolling upgrade starts. Once new machines are ready, the old worker node group can be scaled down further, followed by scaling up the new worker node group, ensuring that the total number of unavailable machines never exceeds n at any time during the upgrade.

Resume upgrade after failure

EKS Anywhere supports re-running the upgrade command post-failure as an experimental feature.

If the upgrade command fails, the user can manually fix the issue (when applicable) and simply rerun the same command. At this point, the CLI will skip the completed tasks, restore the state of the operation, and resume the upgrade process.

The completed tasks are stored in the generated folder as a file named <clusterName>-checkpoint.yaml.

This feature is experimental. To enable this feature, export the following environment variable:

export CHECKPOINT_ENABLED=true

Update vSphere credential

Currently, EKS Anywhere does not support updating vSphere credentials when upgrading a cluster with the eksctl anywhere upgrade command. There are two ways to perform a vSphere credentials update:

- Manually update all vSphere credentials in related Secret objects by following the steps below:

  - Update the EKSA_VSPHERE_PASSWORD environment variable to the new password and get the base64 encoded string of the password using echo -n "<YOUR_PASSWORD>" | base64
  - Update the following secrets in your vSphere cluster using the kubectl edit command:
    - {CLUSTER_NAME}-vsphere-credentials under eksa-system namespace - Update password field under data.
    - {CLUSTER_NAME}-cloud-provider-vsphere-credentials under eksa-system namespace - Decode the string under data, in the decoded string (which is the template for Secret object cloud-provider-vsphere-credential under kube-system namespace), update the {CLUSTER_NAME}.password with the base64 encoding of new password, then encode the string and update data field with the encoded string.
    - vsphere-credentials under eksa-system namespace - Update password, passwordCP, passwordCSI field under data.
    - If annotation kubectl.kubernetes.io/last-applied-configuration exists on any of the above Secret object, update password in kubectl.kubernetes.io/last-applied-configuration field.
    - {CLUSTER_NAME}-csi-vsphere-config under eksa-system namespace - If annotation kubectl.kubernetes.io/last-applied-configuration exists on the secret object, update password in kubectl.kubernetes.io/last-applied-configuration field.

- Only update Secret vsphere-credentials under eksa-system namespace then trigger a full EKS-A CAPI cluster upgrade by modifying the cluster spec:

  - Update the EKSA_VSPHERE_PASSWORD environment variable to the new password and get the base64 encoded string of the password using echo -n "<YOUR_PASSWORD>" | base64
  - Update secret vsphere-credentials under eksa-system namespace - Update password, passwordCP, passwordCSI field under data and in kubectl.kubernetes.io/last-applied-configuration if annotation exists.
  - Modify any field in the cluster config file and then run eksctl anywhere upgrade cluster -f <cluster-config-file> to trigger a full cluster upgrade. This will automatically apply the new credentials to all related secrets.
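For the second approach, the edit to the vsphere-credentials Secret could be scripted instead of made by hand with kubectl edit. The sketch below only builds a merge-patch payload for the three data fields named above. The helper names are hypothetical, it assumes the same new password applies to password, passwordCP and passwordCSI, and it does not touch the kubectl.kubernetes.io/last-applied-configuration annotation, which still needs to be updated separately if present.

```shell
# Hypothetical helper: base64-encode a value on a single line.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: print a JSON merge patch that sets the three password
# fields of the vsphere-credentials Secret to the same new password.
vsphere_credentials_patch() {
  local encoded
  encoded="$(b64 "$1")"
  printf '{"data":{"password":"%s","passwordCP":"%s","passwordCSI":"%s"}}' \
    "$encoded" "$encoded" "$encoded"
}

# Usage (requires access to the management cluster, so it is left commented):
# kubectl patch secret vsphere-credentials -n eksa-system --type merge \
#   -p "$(vsphere_credentials_patch "${EKSA_VSPHERE_PASSWORD}")" \
#   --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig
```

After patching, modify a field in the cluster config file and run the upgrade command as described in the last step so that the new credentials are propagated to the related secrets.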

Troubleshooting

Attempting to upgrade a cluster by more than one Kubernetes minor release results in the following error.

✅ validate immutable fields

❌ validation failed {"validation": "Upgrade preflight validations", "error": "validation failed with 1 errors: WARNING: version difference between upgrade version (1.21) and server version (1.19) do not meet the supported version increment of +1", "remediation": ""}

Error: failed to upgrade cluster: validations failed

For more errors, see the troubleshooting section.

2.4 - Upgrade airgapped cluster

The procedure to upgrade EKS Anywhere clusters in airgapped environments is similar to the procedure for creating new clusters in airgapped environments. The only difference is that you must upgrade your eksctl anywhere CLI before running the steps to download and import the EKS Anywhere dependencies to your local registry mirror.

Prerequisites

- An existing Admin machine

- The upgraded version of the eksctl anywhere CLI installed on the Admin machine

- Docker running on the Admin machine

- At least 80GB in storage space on the Admin machine to temporarily store the EKS Anywhere images locally before importing them to your local registry. Currently, when downloading images, EKS Anywhere pulls all dependencies for all infrastructure providers and supported Kubernetes versions.

- The download and import images commands must be run on an amd64 machine to import amd64 images to the registry mirror.
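Some of these prerequisites can be checked from a shell on the Admin machine before starting. The following is a rough sketch: all function names are hypothetical, the free-space check looks only at the filesystem holding the given directory, and docker info is used as a simple proxy for whether the Docker daemon is reachable.

```shell
# Hypothetical helper: succeed if DIR has at least REQUIRED_GB of free space.
has_free_space_gb() {
  local dir="$1" required_gb="$2" available_kb
  # df -Pk prints POSIX-format output in 1024-byte blocks; field 4 is "Available".
  available_kb="$(df -Pk "$dir" | awk 'NR==2 {print $4}')"
  [ "$available_kb" -ge $(( $required_gb * 1024 * 1024 )) ]
}

# Hypothetical helper: succeed on an amd64 (x86_64) machine.
is_amd64() {
  case "$(uname -m)" in
    x86_64|amd64) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: succeed if the Docker daemon responds.
docker_is_running() {
  docker info >/dev/null 2>&1
}

# Hypothetical helper: report every failed prerequisite for working directory DIR.
airgap_preflight() {
  local dir="$1" failures=0
  has_free_space_gb "$dir" 80 || { echo "less than 80GB free in $dir" >&2; failures=$(( $failures + 1 )); }
  is_amd64 || { echo "not an amd64 machine" >&2; failures=$(( $failures + 1 )); }
  docker_is_running || { echo "Docker is not running" >&2; failures=$(( $failures + 1 )); }
  [ "$failures" -eq 0 ]
}
```

Running airgap_preflight "$PWD" from the directory where the images will be downloaded prints one line per unmet prerequisite and returns a non-zero status if any check fails.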

Procedure

- Download the EKS Anywhere artifacts that contain the list and locations of the EKS Anywhere dependencies. A compressed file eks-anywhere-downloads.tar.gz will be downloaded. You can use the eksctl anywhere download artifacts --dry-run command to see the list of artifacts it will download.

  eksctl anywhere download artifacts

- Decompress the eks-anywhere-downloads.tar.gz file using the following command. This will create an eks-anywhere-downloads folder.

  tar -xvf eks-anywhere-downloads.tar.gz

- Download the EKS Anywhere image dependencies to the Admin machine. This command may take several minutes (10+) to complete. To monitor the progress of the command, you can run with the -v 6 command line argument, which will show details of the images that are being pulled. Docker must be running for the following command to succeed.

  eksctl anywhere download images -o images.tar

- Set up a local registry mirror to host the downloaded EKS Anywhere images and configure your Admin machine with the certificates and authentication information if your registry requires it. For details, refer to the Registry Mirror Configuration documentation.

- Import images to the local registry mirror using the following command. Set REGISTRY_MIRROR_URL to the URL of the local registry mirror you created in the previous step. This command may take several minutes to complete. To monitor the progress of the command, you can run with the -v 6 command line argument. When using self-signed certificates for your registry, you should run with the --insecure command line argument to indicate skipping TLS verification while pushing helm charts and bundles.

  export REGISTRY_MIRROR_URL=<registryurl>

  eksctl anywhere import images -i images.tar -r ${REGISTRY_MIRROR_URL} \
    --bundles ./eks-anywhere-downloads/bundle-release.yaml

- Optionally import curated packages to your registry mirror. The curated packages images are copied from Amazon ECR to your local registry mirror in a single step, as opposed to separate download and import steps. Follow the Curated Packages documentation.

If the previous steps succeeded, all of the required EKS Anywhere dependencies are now present in your local registry. Before you upgrade your EKS Anywhere cluster, configure registryMirrorConfiguration in your EKS Anywhere cluster specification with the information for your local registry. For details see the Registry Mirror Configuration documentation.

NOTE: If you are running EKS Anywhere on bare metal, you must configure osImageURL and hookImagesURLPath in your EKS Anywhere cluster specification with the location of the upgraded node operating system image and hook OS image. For details, reference the bare metal configuration documentation.

Next Steps

2.5 - Upgrade management components

Note

The eksctl anywhere upgrade management-components subcommand was added in EKS Anywhere version v0.19.0 for all providers. Management component upgrades can only be done through the eksctl CLI, not through the Kubernetes API.

What are management components?

Management components run on management or standalone clusters and are responsible for managing the lifecycle of workload clusters. Management components include but are not limited to:

- Cluster API controller

- EKS Anywhere cluster lifecycle controller

- Curated Packages controller

- Provider-specific controllers (vSphere, Tinkerbell etc.)

- Tinkerbell services (Boots, Hegel, Rufio, etc.)

- Custom Resource Definitions (CRDs) (clusters, eksareleases, etc.)

Why upgrade management components separately?

The existing eksctl anywhere upgrade cluster command, when run against management or standalone clusters, upgrades both the management and cluster components. When upgrading versions, this upgrade process performs a rolling replacement of nodes in the cluster, which brings operational complexity, and should be carefully planned and executed.

With the new eksctl anywhere upgrade management-components command, you can upgrade management components separately from cluster components. This enables you to get the latest updates to the management components such as Cluster API controller, EKS Anywhere controller, and provider-specific controllers without a rolling replacement of nodes in the cluster, which reduces the operational complexity of the operation.

Check management components versions

You can check the current and new versions of management components with the eksctl anywhere upgrade plan management-components command:

eksctl anywhere upgrade plan management-components -f management-cluster.yaml

The output should appear similar to the following:

NAME                 CURRENT VERSION       NEXT VERSION
EKS-A Management     v0.18.3+cc70180       v0.19.0+a672f31
cert-manager         v1.13.0+68bec33       v1.13.2+a34c207
cluster-api          v1.5.2+b14378d        v1.6.0+04c07bc
kubeadm              v1.5.2+5762149        v1.6.0+5bf0931
vsphere              v1.7.4+6ecf386        v1.8.5+650acfa
etcdadm-bootstrap    v1.0.10+c9a5a8a       v1.0.10+1ceb898
etcdadm-controller   v1.0.16+0ed68e6       v1.0.17+5e33062

Alternatively, you can run the eksctl anywhere upgrade plan cluster command against your management cluster, which shows the version differences for both management and cluster components.

Upgrade management components

To perform the management components upgrade, run the following command:

eksctl anywhere upgrade management-components -f management-cluster.yaml

The output should appear similar to the following:

Performing setup and validations

✅ Docker provider validation

✅ Control plane ready

✅ Cluster CRDs ready

Upgrading core components

Installing new eksa components

🎉 Management components upgraded!

At this point, a new eksarelease custom resource will be available in your management cluster, which means new cluster components that correspond to your current EKS Anywhere version are available for cluster upgrades. You can subsequently run a workload cluster upgrade with the eksctl anywhere upgrade cluster command, or by updating eksaVersion field in your workload cluster’s spec and applying it to your management cluster with Kubernetes API-compatible tooling such as kubectl, GitOps, or Terraform.

2.6 -

There are a few dimensions of versioning to consider in your EKS Anywhere deployments:

- Management clusters to workload clusters: Management clusters can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of workload clusters. Workload clusters cannot have an EKS Anywhere version greater than management clusters.

- Management components to cluster components: Management components can be at most 1 EKS Anywhere minor version greater than the EKS Anywhere version of cluster components.

- EKS Anywhere version upgrades: Skipping EKS Anywhere minor versions during upgrade is not supported (v0.17.x to v0.19.x). We recommend you upgrade one EKS Anywhere minor version at a time (v0.17.x to v0.18.x to v0.19.x).

- Kubernetes version upgrades: Skipping Kubernetes minor versions during upgrade is not supported (v1.26.x to v1.28.x). You must upgrade one Kubernetes minor version at a time (v1.26.x to v1.27.x to v1.28.x).

- Kubernetes control plane and worker nodes: As of Kubernetes v1.28, worker nodes can be up to 3 minor versions lower than the Kubernetes control plane minor version. In earlier Kubernetes versions, worker nodes could be up to 2 minor versions lower than the Kubernetes control plane minor version.
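The skew rules above are simple arithmetic on minor version numbers, so they can be checked before starting an upgrade. The sketch below is illustrative only: minor_of, is_single_minor_step, and worker_skew_ok are hypothetical helper names, and the functions assume both versions share the same major version.

```shell
#!/usr/bin/env bash
# minor_of VERSION: print the minor number of a version such as v0.18.3 or 1.27.
minor_of() {
  local v="${1#v}"                 # drop an optional leading "v"
  v="${v#*.}"                      # drop the major number and its dot
  printf '%s\n' "${v%%[!0-9]*}"    # keep only the leading digits (the minor)
}

# is_single_minor_step FROM TO: succeed if TO is the same minor version as
# FROM or exactly one minor version higher (no skipped minor versions).
is_single_minor_step() {
  local from to
  from="$(minor_of "$1")"
  to="$(minor_of "$2")"
  [ $(( to - from )) -ge 0 ] && [ $(( to - from )) -le 1 ]
}

# worker_skew_ok CONTROL_PLANE WORKER: succeed if the worker version is within
# the allowed skew (3 minors from Kubernetes v1.28 onward, 2 minors before).
worker_skew_ok() {
  local cp worker max_skew=2
  cp="$(minor_of "$1")"
  worker="$(minor_of "$2")"
  if [ "$cp" -ge 28 ]; then max_skew=3; fi
  [ "$worker" -le "$cp" ] && [ $(( cp - worker )) -le "$max_skew" ]
}

# Usage:
#   is_single_minor_step v0.17.2 v0.18.0 && echo "supported upgrade path"
#   worker_skew_ok v1.28.3 v1.25.9 && echo "worker skew is allowed"
```

The same is_single_minor_step check, called with the workload cluster version first and the management cluster version second, expresses the rule that a management cluster can be at most one EKS Anywhere minor version ahead.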

2.7 -

Currently, EKS Anywhere does not support updating vSphere credentials when upgrading a cluster with the eksctl anywhere upgrade command. There are two ways to update vSphere credentials:

- Manually update all vSphere credentials in related Secret objects, follow the steps below:

  - Update EKSA_VSPHERE_PASSWORD environment variable to the new password and get the base64 encoded string of the password using echo -n "<YOUR_PASSWORD>" | base64

  - Update the following secrets in your vSphere cluster using kubectl edit command:

    - {CLUSTER_NAME}-vsphere-credentials under eksa-system namespace - Update password field under data.

    - {CLUSTER_NAME}-cloud-provider-vsphere-credentials under eksa-system namespace - Decode the string under data, in the decoded string (which is the template for Secret object cloud-provider-vsphere-credential under kube-system namespace), update the {CLUSTER_NAME}.password with the base64 encoding of new password, then encode the string and update data field with the encoded string.

    - vsphere-credentials under eksa-system namespace - Update password, passwordCP, passwordCSI field under data.

      - If annotation kubectl.kubernetes.io/last-applied-configuration exists on any of the above Secret object, update password in kubectl.kubernetes.io/last-applied-configuration field.

    - {CLUSTER_NAME}-csi-vsphere-config under eksa-system namespace - If annotation kubectl.kubernetes.io/last-applied-configuration exists on the secret object, update password in kubectl.kubernetes.io/last-applied-configuration field.

- Only update Secret vsphere-credentials under eksa-system namespace then trigger a full EKS-A CAPI cluster upgrade by modifying the cluster spec:

  - Update EKSA_VSPHERE_PASSWORD environment variable to the new password and get the base64 encoded string of the password using echo -n "<YOUR_PASSWORD>" | base64

  - Update secret vsphere-credentials under eksa-system namespace - Update password, passwordCP, passwordCSI field under data and in kubectl.kubernetes.io/last-applied-configuration if annotation exists.

  - Modify any field in the cluster config file and then run eksctl anywhere upgrade cluster -f <cluster-config-file> to trigger a full cluster upgrade. This will automatically apply the new credentials to all related secrets.
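Both approaches depend on producing the base64 form of the new password without an accidental trailing newline, which is why the steps use echo -n. The sketch below shows one way to encode a value and verify it by decoding it again. The function names are hypothetical, and the sample password in the usage comment is a placeholder, not a real credential.

```shell
#!/usr/bin/env bash
# encode_secret_value VALUE: base64-encode VALUE exactly as given.
# printf '%s' avoids appending a newline, like `echo -n` in the steps above;
# tr removes the line wrapping that some base64 implementations add.
encode_secret_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# decode_secret_value ENCODED: decode a base64 string, for example one copied
# from the data section of a Secret, to confirm what it contains.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# Usage (placeholder password):
#   encoded="$(encode_secret_value 'example-password')"
#   decode_secret_value "$encoded"   # prints example-password
```

The encoded string is what goes into the password, passwordCP, and passwordCSI fields under data when editing the Secret.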

3 - Scale cluster

3.1 - Scale Bare Metal cluster

Scaling nodes on Bare Metal clusters

When you are horizontally scaling your Bare Metal EKS Anywhere cluster, consider the number of nodes you need for your control plane and for your data plane.

See the Kubernetes Components documentation to learn the differences between the control plane and the data plane (worker nodes).

Horizontally scaling the cluster is done by increasing the number for the control plane or worker node groups under the Cluster specification.

NOTE: If etcd is running on your control plane (the default configuration) you should scale your control plane in odd numbers (3, 5, 7…).

apiVersion: anywhere.eks.amazonaws.com/v1
kind: Cluster
metadata:
  name: test-cluster
spec:
  controlPlaneConfiguration:
    count: 1 # increase this number to horizontally scale your control plane
    ...
  workerNodeGroupConfigurations:
  - count: 1 # increase this number to horizontally scale your data plane
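The odd-number recommendation for the control plane follows from etcd's majority quorum: a cluster of n members needs n/2 + 1 (rounded down) members available, so adding a member to an odd-sized cluster does not increase the number of failures it can survive. The sketch below works through that arithmetic; etcd_fault_tolerance is a hypothetical helper name used only for illustration.

```shell
#!/usr/bin/env bash
# etcd_fault_tolerance MEMBERS: print how many members can fail while a
# majority (quorum) of the etcd cluster is still available.
etcd_fault_tolerance() {
  local members="$1"
  local quorum=$(( members / 2 + 1 ))   # integer division: majority of members
  echo $(( members - quorum ))
}

# Usage: compare odd and even control plane counts.
#   for n in 3 4 5; do echo "$n members tolerate $(etcd_fault_tolerance "$n") failure(s)"; done
```

With 3 members the cluster tolerates 1 failure, with 4 members it still tolerates only 1, and with 5 members it tolerates 2.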

Next, you must ensure you have enough available hardware for the scale-up operation to succeed. Available hardware could have been provided to the cluster as extra hardware during a prior create command, or can be provided during the scale-up process by passing the hardware CSV file to the upgrade cluster command (explained in detail below). For a scale-down operation, you can skip directly to the upgrade cluster command.

To check if you have enough available hardware for scale up, use the kubectl command below to check whether there is hardware with the selector labels corresponding to the control plane or worker node group and without the ownerName label.

kubectl get hardware -n eksa-system --show-labels

For example, if you want to scale a worker node group with selector label type=worker-group-1, then you must have an additional hardware object in your cluster with the label type=worker-group-1 that doesn’t have the ownerName label.

In the command shown below, eksa-worker2 matches the selector label and it doesn’t have the ownerName label. Thus, it can be used to scale up worker-group-1 by 1.

kubectl get hardware -n eksa-system --show-labels

NAME STATE LABELS

eksa-controlplane type=controlplane,v1alpha1.tinkerbell.org/ownerName=abhnvp-control-plane-template-1656427179688-9rm5f,v1alpha1.tinkerbell.org/ownerNamespace=eksa-system

eksa-worker1 type=worker-group-1,v1alpha1.tinkerbell.org/ownerName=abhnvp-md-0-1656427179689-9fqnx,v1alpha1.tinkerbell.org/ownerNamespace=eksa-system

eksa-worker2 type=worker-group-1

If you don’t have any available hardware that matches this requirement in the cluster, you can set up a new hardware CSV. You can provide this hardware inventory file to the upgrade cluster command.
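The availability check described above (selector label present, ownerName label absent) can be applied to the command output mechanically. The following is a minimal sketch: available_hardware is a hypothetical helper name, and it assumes the labels appear as the last whitespace-separated field of each row, as in the sample output above.

```shell
#!/usr/bin/env bash
# available_hardware SELECTOR: read `kubectl get hardware --show-labels` output
# on stdin and print the names of hardware that carry the SELECTOR label
# (for example type=worker-group-1) but have no ownerName label.
available_hardware() {
  awk -v selector="$1" '
    $1 == "NAME" || NF < 2 { next }   # skip the header row and blank lines
    {
      n = split($NF, labels, ",")     # labels are the last field, comma-separated
      has_selector = 0
      owned = 0
      for (i = 1; i <= n; i++) {
        if (labels[i] == selector) has_selector = 1
        if (labels[i] ~ /^v1alpha1\.tinkerbell\.org\/ownerName=/) owned = 1
      }
      if (has_selector && !owned) print $1
    }'
}

# Usage:
#   kubectl get hardware -n eksa-system --show-labels | available_hardware type=worker-group-1
```

The number of names printed is the number of nodes by which that node group can be scaled up without adding hardware. A kubectl label selector such as -l 'type=worker-group-1,!v1alpha1.tinkerbell.org/ownerName' should express the same filter on the server side.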

Upgrade Cluster Command for Scale Up/Down

- eksctl CLI: To upgrade a workload cluster with eksctl, run:

  eksctl anywhere upgrade cluster -f cluster.yaml \
    --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig
  # To add more hardware, also pass: --hardware-csv <hardware.csv>

  As noted earlier, adding the --kubeconfig option tells eksctl to use the management cluster identified by that kubeconfig file to upgrade a different workload cluster.

- kubectl CLI: The cluster lifecycle feature lets you use kubectl to talk to the Kubernetes API to upgrade a workload cluster. To use kubectl, run:

  kubectl apply -f eksa-w01-cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

  To check the state of a cluster managed with the cluster lifecycle feature, use kubectl to show the cluster object with its status.

  The status field on the cluster object holds information about the current state of the cluster.

  kubectl get clusters w01 -o yaml

  The cluster has been fully upgraded once the status of the Ready condition is marked True. See the cluster status guide for more information.

- GitOps: See Manage separate workload clusters with GitOps

- Terraform: See Manage separate workload clusters with Terraform

NOTE: For kubectl, GitOps and Terraform:

- The baremetal controller does not support scaling upgrades and Kubernetes version upgrades in the same request.

- If you need to add machines while scaling a workload cluster, run:

  eksctl anywhere generate hardware -z updated-hardware.csv > updated-hardware.yaml
  kubectl apply -f updated-hardware.yaml

- For scaling multiple workload clusters, it is essential that the hardware used for scaling up clusters has labels and selectors that are unique to the target workload cluster. For instance, for an EKS Anywhere cluster named eksa-workload1, the hardware assigned to this cluster should have labels that are only used for this cluster, such as type=eksa-workload1-cp and type=eksa-workload1-worker. Another workload cluster named eksa-workload2 can have labels such as type=eksa-workload2-cp and type=eksa-workload2-worker. Although labels can be arbitrary, they must be unique for each workload cluster. Not specifying unique cluster labels can cause cluster upgrades to behave in unexpected ways, which may lead to unsuccessful upgrades and unstable clusters.

Selective Server Removal During Scale Down

When scaling down your Bare Metal EKS Anywhere cluster, you may want to remove a specific server rather than letting the system automatically choose which node to remove. By default, Cluster API will remove nodes in a non-deterministic order during scale down operations.

IMPORTANT: Do not attempt to remove specific servers by deleting entries from the hardware CSV file and running the upgrade command. This approach will not have deterministic behavior.

To target a specific server for removal during scale down, use the Cluster API delete annotation on the corresponding machine resource. This ensures that the specific machine is prioritized for removal during the scale-down operation.

Steps to Remove a Specific Server

- Identify the target node: First, determine which node you want to remove by listing all nodes in your cluster:

  kubectl get nodes

- Find the corresponding CAPI machine: List all CAPI machines to find the machine resource that corresponds to your target node:

  kubectl get machines.cluster.x-k8s.io -n eksa-system

  You can also find the specific machine for a node using:

  NODE_NAME="your-target-node-name"
  kubectl get machines.cluster.x-k8s.io -n eksa-system --no-headers | awk -v node="$NODE_NAME" '$3==node {print $1}'

- Add the delete annotation: Mark the machine for deletion by adding the Cluster API delete annotation:

  MACHINE_NAME="your-machine-name"
  kubectl annotate machines.cluster.x-k8s.io $MACHINE_NAME cluster.x-k8s.io/delete-machine=yes -n eksa-system

- Update your cluster specification: Reduce the node count in your EKS Anywhere cluster specification file:

  apiVersion: anywhere.eks.amazonaws.com/v1
  kind: Cluster
  metadata:
    name: test-cluster
  spec:
    workerNodeGroupConfigurations:
    - count: 2 # decrease this number for scale down

- Apply the scale down operation: Run the upgrade command to perform the scale down:

  eksctl anywhere upgrade cluster -f cluster.yaml --kubeconfig mgmt/mgmt-eks-a-cluster.kubeconfig

The annotated machine will be prioritized for removal during the scale-down operation, ensuring that your specific target server is removed from the cluster.
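Because the annotation decides which server is removed, it may be worth guarding the node-to-machine lookup so that a mistyped node name never results in annotating nothing or the wrong machine. The sketch below wraps the awk filter from the steps above. pick_single_machine is a hypothetical helper name, and the function assumes the node name is the third column of the machine listing, as in that filter.

```shell
#!/usr/bin/env bash
# pick_single_machine NODE: read `kubectl get machines.cluster.x-k8s.io
# --no-headers` output on stdin and print the machine whose node name
# (third column) equals NODE. Fails unless exactly one machine matches.
pick_single_machine() {
  awk -v node="$1" '
    $3 == node { name = $1; count++ }
    END {
      if (count != 1) {
        printf("expected 1 machine for node %s, found %d\n", node, count) > "/dev/stderr"
        exit 1
      }
      print name
    }'
}

# Usage: annotate only when the lookup succeeds.
#   MACHINE_NAME="$(kubectl get machines.cluster.x-k8s.io -n eksa-system --no-headers \
#     | pick_single_machine "your-target-node-name")" \
#     && kubectl annotate machines.cluster.x-k8s.io "$MACHINE_NAME" \
#          cluster.x-k8s.io/delete-machine=yes -n eksa-system
```

Failing on zero or multiple matches keeps the annotate step from running with an empty or ambiguous machine name.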

NOTE: The hardware CSV is designed to manage the hardware catalog by creating Hardware, Machine, and BMC credential resources. For operational tasks like scaling, always use the cluster specification count and CAPI machine annotations rather than modifying the hardware CSV.

Autoscaling

EKS Anywhere supports autoscaling of worker node groups using the Kubernetes Cluster Autoscaler, which is available as a curated package.

See the Cluster Autoscaler and curated package documentation for details on how to configure your cluster spec to autoscale worker node groups.

3.2 - Scale CloudStack cluster

When you are scaling your CloudStack EKS Anywhere cluster, consider the number of nodes you need for your control plane and for your data plane. In each case you can scale the cluster manually or automatically.

See the Kubernetes Components documentation to learn the differences between the control plane and the data plane (worker nodes).

Manual cluster scaling

NOTE: If etcd is running on your control plane (the default configuration) you should scale your control plane in odd numbers (3, 5, 7, and so on).

apiVersion: anywhere.eks.amazonaws.com/v1
kind: Cluster
metadata:
  name: test-cluster
spec:
  controlPlaneConfiguration:
    count: 1 # increase this number to horizontally scale your control plane
    ...
  workerNodeGroupConfigurations:
  - count: 1 # increase this number to horizontally scale your data plane

Once you have made configuration updates, you can use eksctl, kubectl, GitOps, or Terraform specified in the upgrade cluster command

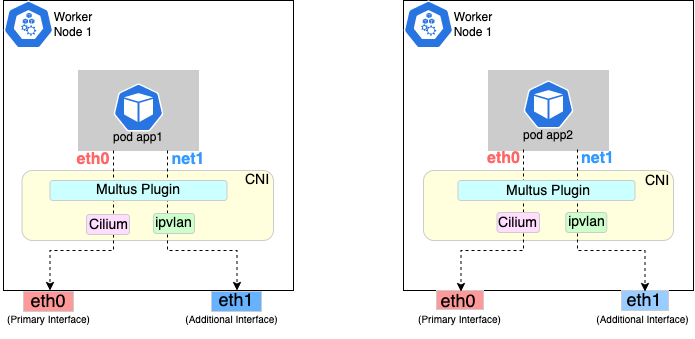

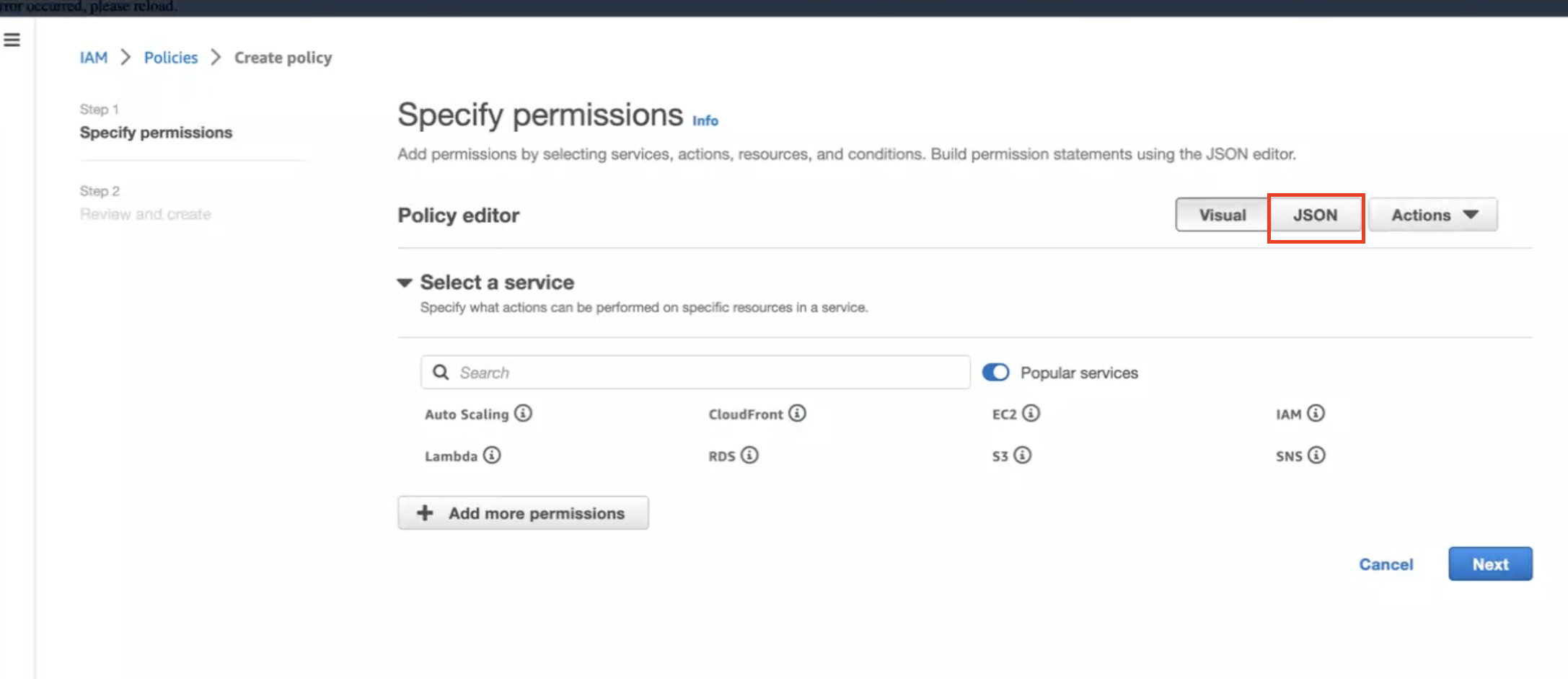

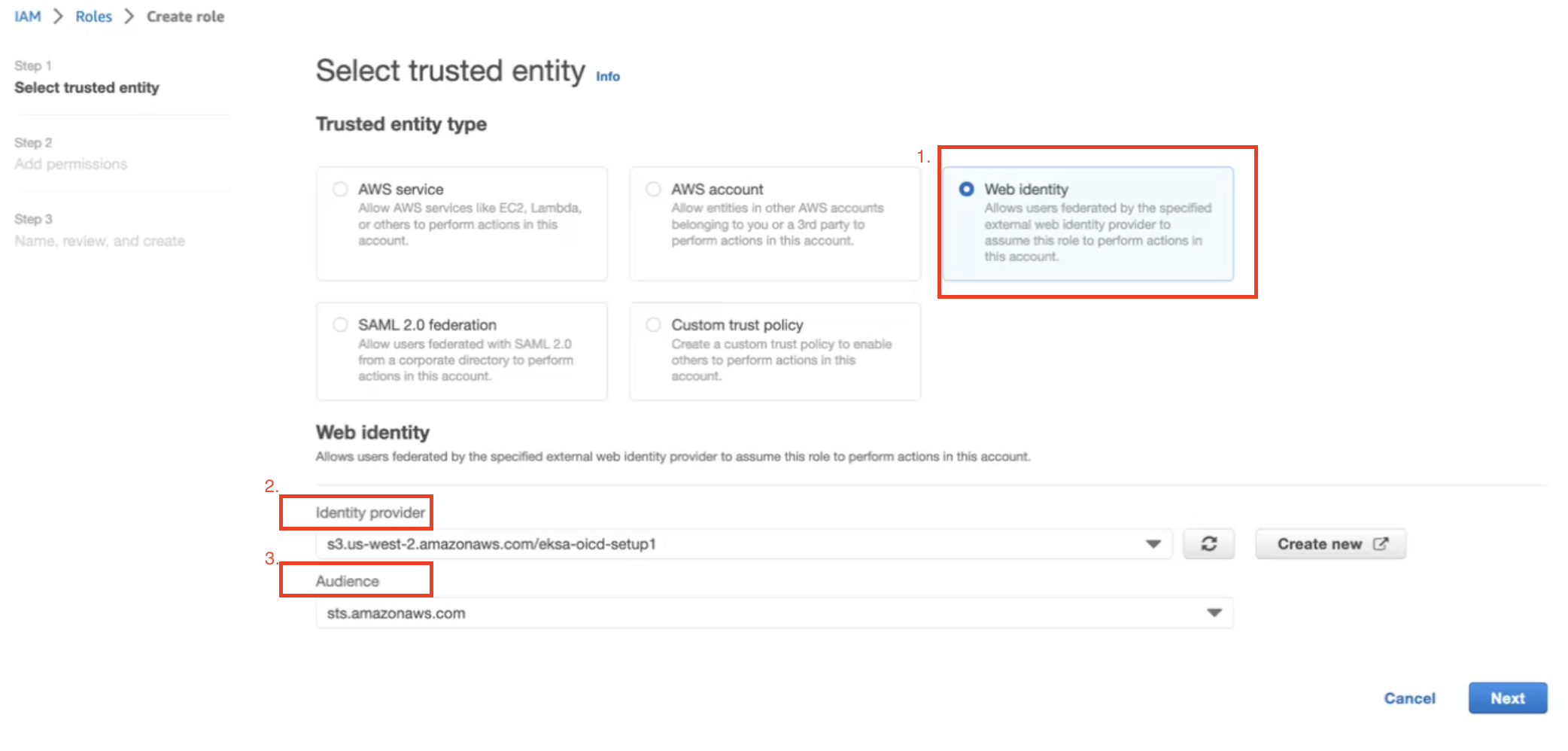

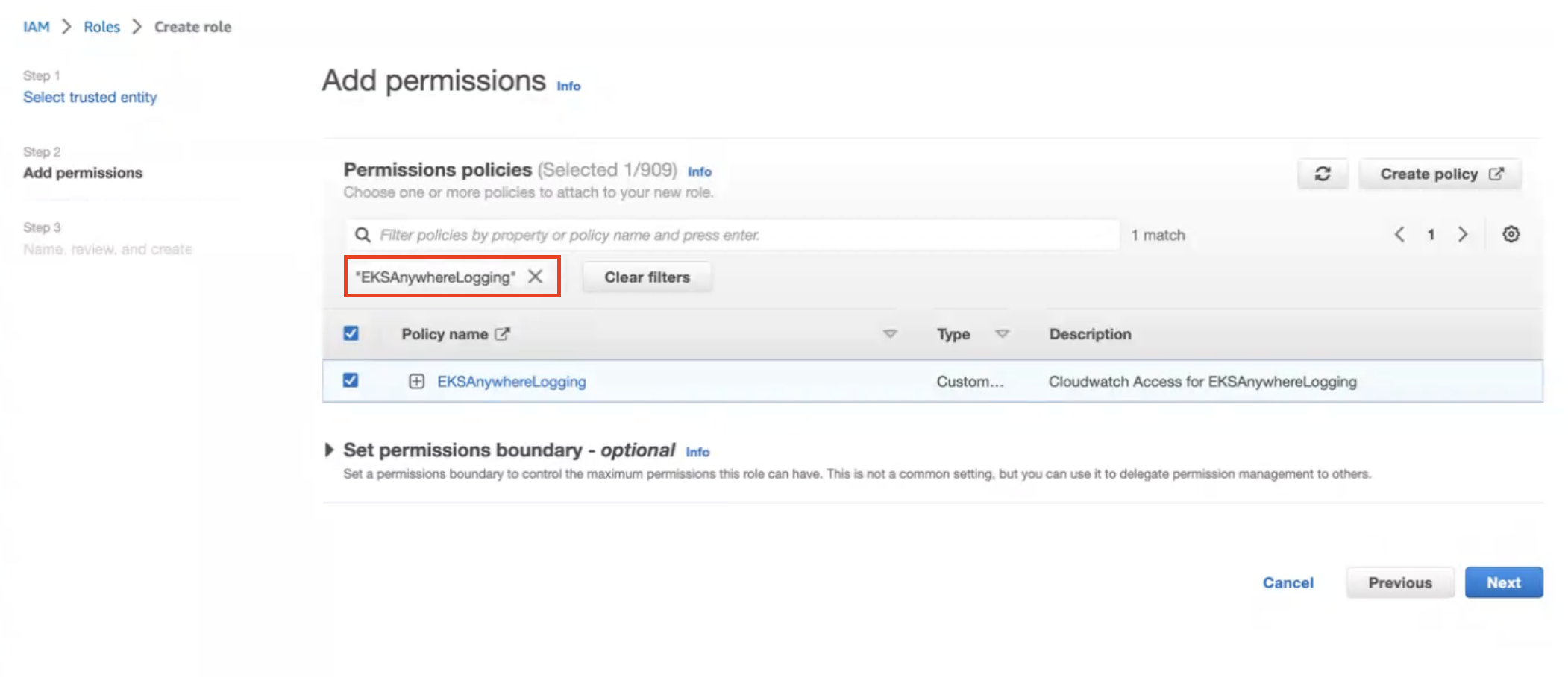

to apply those changes.